Multiphysics for an SFR Pincell

In this tutorial, you will learn how to:

Couple OpenMC, NekRS, and MOOSE for modeling a Sodium Fast Reactor (SFR) pincell

Use MOOSE's reactor module to make meshes for common reactor geometries

Use subcycling to efficiently allocate computational resources

To access this tutorial,

Geometry and Computational Model

The geometry consists of a single pincell, cooled by sodium. The dimensions and assumed operating conditions are summarized in Table 1. Note that these conditions are not necessarily representative of full-power nuclear reactor conditions, since we select laminar flow conditions in order to reduce meshing requirements for the sake of a fast-running tutorial. Because the flow is laminar, we also use a much lower power density than seen in prototypical systems, but for the sake of a tutorial, all the essential components of multiphysics are present (coupling via power, temperature, and density). In addition, to keep the computational requirements as low as possible for NekRS, the height is only 50 cm (so the eigenvalue predicted by OpenMC will also be very low, due to high axial leakage).

Table 1: Geometric and operating specifications for a pincell

| Parameter | Value |
|---|---|
| Fuel pellet diameter | 0.825 cm |
| Clad outer diameter | 0.97 cm |
| Pin pitch | 1.28 cm |
| Height | 50.0 cm |
| Reynolds number | 500.0 |
| Prandtl number | 0.00436 |
| Inlet temperature | 573 K |
| Outlet temperature | 708 K |
| Power | 250 W |
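Two derived quantities help put the values in Table 1 in context. The sketch below computes the average volumetric power density in the fuel pellet and the average heat flux on the clad outer surface, assuming all 250 W is deposited in the pellet and leaves through the clad surface. The variable names are illustrative and are not taken from the tutorial input files.

```python
import math

# Values from Table 1
pellet_diameter = 0.825  # cm
clad_diameter = 0.97     # cm
height = 50.0            # cm
power = 250.0            # W

# Average volumetric power density in the fuel pellet
fuel_volume = math.pi * (pellet_diameter / 2.0) ** 2 * height  # cm^3
power_density = power / fuel_volume                            # W/cm^3

# Average heat flux on the clad outer surface
# (assumes all of the power leaves through this surface)
clad_surface_area = math.pi * clad_diameter * height  # cm^2
average_heat_flux = power / clad_surface_area         # W/cm^2

print(f"fuel power density     = {power_density:.2f} W/cm^3")
print(f"average clad heat flux = {average_heat_flux:.2f} W/cm^2")
```

Both values are far below what a prototypical SFR would see, which is consistent with the low-power, laminar-flow choice made for this tutorial.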

We will couple OpenMC, NekRS, and MOOSE heat conduction in a "single stack" hierarchy, with OpenMC as the main application, MOOSE as the second-level application, and NekRS as the third-level application. This structure is shown in Figure 1, and will allow us to sub-cycle all three codes with respect to one another. Solid black lines indicate data transfers that occur directly from application to application, while the dashed black lines indicate data transfers that first have to pass through an "intermediate" application.

Figure 1: "Single stack" multiapp hierarchy used in this tutorial.

Like with all Cardinal simulations, Picard iterations are achieved "in time." We have not expounded greatly upon this notion in previous tutorials, so we dedicate some space here. The overall Cardinal simulation has a notion of "time" and a time step index, because all code applications will use a Transient executioner. However, usually only NekRS is solved with non-zero time derivatives. The notion of time-stepping is then used to customize how frequently (i.e., in units of time steps) data is exchanged. To help explain the strategy, represent the time step sizes in NekRS, MOOSE, and OpenMC as $\Delta t_{nek}$, $M\Delta t_{nek}$, and $NM\Delta t_{nek}$, respectively. Selecting $N\neq 1$ and/or $M\neq 1$ is referred to as "sub-cycling." In other words, NekRS runs $M$ times for each MOOSE solve, while MOOSE runs $N$ times for each OpenMC solve, effectively reducing the total number of MOOSE solves by a factor of $M$ and the total number of OpenMC solves by a factor of $NM$ compared to the naive approach of exchanging data based on the smallest time step across the coupled codes. We can then also reduce the total number of data transfers by only transferring data when needed. Each Picard iteration consists of:

Run an OpenMC $k$-eigenvalue calculation. Transfer the heat source to MOOSE.

Repeat the following two steps $N$ times:

Run a steady-state MOOSE calculation. Transfer the heat flux to NekRS.

Run a transient NekRS calculation for $M$ time steps. Transfer the wall temperature to MOOSE.

Transfer the solid temperature, the fluid temperature, and the fluid density to OpenMC.

Figure 2 shows the procedure for an example selection of $N = 3$, meaning that the MOOSE-NekRS sub-solve occurs three times for every OpenMC solve.
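The Picard procedure above can be sketched as a nested loop. Here `N` is the number of MOOSE-NekRS sub-solves per OpenMC solve and `M` is the number of NekRS time steps per MOOSE solve. The function only counts solves; it is an illustration of the control flow and not Cardinal code, and `M = 10` is an arbitrary value chosen for the example.

```python
def picard_iteration_counts(n_picard, N, M):
    """Count solves for n_picard Picard iterations with subcycling ratios N and M."""
    counts = {"openmc": 0, "moose": 0, "nek_steps": 0}
    for _ in range(n_picard):
        # OpenMC k-eigenvalue solve; heat source is sent to MOOSE
        counts["openmc"] += 1
        for _ in range(N):
            # steady-state MOOSE solve; heat flux is sent to NekRS
            counts["moose"] += 1
            # M transient NekRS steps; wall temperature is sent back to MOOSE
            counts["nek_steps"] += M
        # temperatures and density are sent up to OpenMC at this point
    return counts


counts = picard_iteration_counts(n_picard=2, N=3, M=10)
print(counts)
```

For a fixed number of NekRS time steps, increasing `M` or `N` reduces how often the more expensive outer solves and their data transfers occur.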

Figure 2: Coupling procedure with subcycling for a calculation with OpenMC, MOOSE, and NekRS

In this tutorial, we will use different "time steps" for OpenMC, MOOSE, and NekRS, and we invite you at the end of the tutorial to explore the impact of changing these time step magnitudes to see how you can control the frequency of data transfers.

OpenMC Model

OpenMC is used to solve for the neutron transport and power distribution. The OpenMC model is created using OpenMC's Python API. We will build this geometry using CSG and divide the pincell into a number of axial layers. On each layer, we will apply temperature and density feedback from the coupled MOOSE-NekRS simulation.

First, we create a number of materials to represent the fuel pellet and cladding. Because these materials will only receive temperature updates (i.e. we will not change their density, because doing so would require us to move cell boundaries in order to preserve mass), we can simply create one material that we will use on all axial layers. Next, we create a number of materials to represent the sodium material in each axial layer of the model. Because the sodium cells will change both their temperature and density, we need to create a unique material for each axial layer.

Next, we divide the geometry into a number of axial layers, where on each layer we set up cells to represent the pellet, cladding, and sodium. Each layer is then described by lying between two $z$-planes. The boundary conditions on the top and bottom faces of the OpenMC model are set to vacuum. Finally, we declare a number of settings related to the initial fission source (uniform over the fissionable regions) and how temperature feedback is applied, and then export all files to XML.
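The axial layering described above can be sketched in plain Python, without importing OpenMC. The number of layers (`n_layers = 5`), the choice of placing the bottom of the pin at z = 0, and the material naming are all assumptions made for illustration; the actual values live in the tutorial's Python script.

```python
height = 50.0   # cm, from Table 1
n_layers = 5    # hypothetical; pick the axial resolution you want for feedback

# z-coordinates of the planes bounding the layers (bottom of the pin assumed at z = 0)
z_planes = [height * i / n_layers for i in range(n_layers + 1)]

layers = []
for i in range(n_layers):
    layers.append({
        "z_min": z_planes[i],
        "z_max": z_planes[i + 1],
        # only the outermost planes are problem boundaries
        "bottom_bc": "vacuum" if i == 0 else "transmission",
        "top_bc": "vacuum" if i == n_layers - 1 else "transmission",
        # sodium gets a unique material per layer; fuel and clad each share one
        "sodium_material": f"sodium_{i}",
    })

for layer in layers:
    print(layer)
```

In an OpenMC script, each pair of bounding coordinates would typically become a pair of z-plane surfaces, with the cells in a layer defined as the region between them.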

(tutorials/pincell_multiphysics/pincell.py)

You can create these XML files by running

Heat Conduction Model

The MOOSE heat transfer module is used to solve for energy conservation in the solid. The solid mesh is shown in Figure 3. This mesh is generated using MOOSE's reactor module, which can be used to make sophisticated meshes of typical reactor geometries such as pin lattices, ducts, and reactor vessels. In this example, we use this module to set up just a single pincell.

Figure 3: Solid mesh

This mesh is described in the solid.i file,

You can generate this mesh by running

which will generate the mesh as the solid_in.e file. You will note in Figure 3 that this mesh actually also includes mesh for the fluid region. As shown in Figure 1, we need a mesh region to "receive" the fluid temperature and density from NekRS, because NekRS cannot directly communicate with OpenMC by skipping the second-level app (MOOSE). Therefore, no solve will occur on this fluid mesh; it simply exists to receive the fluid solution from NekRS before being passed "upwards" to OpenMC. Having this dummy "receiving-only" mesh in the solid application requires a few extra steps when we set up the MOOSE heat conduction model in Solid Input Files.

NekRS Model

NekRS is used to solve the incompressible Navier-Stokes equations in non-dimensional form. The NekRS input files needed to solve the incompressible Navier-Stokes equations are:

fluid.re2: NekRS mesh (generated by exo2nek starting from MOOSE-generated meshes, to be discussed shortly)

fluid.par: High-level settings for the solver, boundary condition mappings to sidesets, and the equations to solve

fluid.udf: User-defined C++ functions for on-line postprocessing and model setup

fluid.oudf: User-defined OCCA kernels for boundary conditions and source terms

A detailed description of all of the available parameters, settings, and use cases for these input files is available on the NekRS documentation website. Because the purpose of this analysis is to demonstrate Cardinal's capabilities, only the aspects of NekRS required to understand the present case will be covered.

We first create the mesh using MOOSE's reactor module. The syntax used to build a HEX27 fluid mesh is shown below. A major difference from the dummy fluid receiving portion of the solid mesh is that we now set up some boundary layers on the pincell surfaces, by providing ring_radii (and other parameters) as vectors.

However, NekRS requires a HEX20 mesh format. Therefore, we use a NekMeshGenerator to convert from HEX27 to HEX20 format, while also moving the higher-order side nodes on the pincell surface to match the curvilinear elements. The syntax used to convert from the HEX27 fluid mesh to a HEX20 fluid mesh, while preserving the pincell surface, is shown below.
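To illustrate why the higher-order side nodes need to be moved, the sketch below takes two corner nodes on the clad surface, shows that the midpoint of the straight edge between them lies inside the circle, and then projects it radially back onto the surface. This is only a geometric illustration under the assumption that the pin axis is the z-axis through the origin; it is not the implementation used by NekMeshGenerator, and `project_to_cylinder` is a hypothetical helper.

```python
import math


def project_to_cylinder(x, y, z, radius):
    """Move a node radially (in the x-y plane) so that it sits at the given radius."""
    r = math.hypot(x, y)
    if r == 0.0:
        raise ValueError("cannot project a node that lies on the cylinder axis")
    scale = radius / r
    return (x * scale, y * scale, z)


clad_radius = 0.97 / 2.0  # cm, from Table 1

# Two corner nodes on the clad surface, 30 degrees apart
theta = math.radians(30.0)
a = (clad_radius, 0.0, 0.0)
b = (clad_radius * math.cos(theta), clad_radius * math.sin(theta), 0.0)

# The midpoint of the straight edge falls inside the circle
mid = tuple(0.5 * (p + q) for p, q in zip(a, b))
# Projecting it radially places it back on the clad surface
mid_on_surface = project_to_cylinder(*mid, clad_radius)

print("radius before:", math.hypot(mid[0], mid[1]))
print("radius after: ", math.hypot(mid_on_surface[0], mid_on_surface[1]))
```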

(tutorials/pincell_multiphysics/convert.i)

To generate the meshes, we run:

and then use the exo2nek utility to convert from the Exodus file format (convert.exo) into the custom .re2 format required for NekRS. A depiction of the outputs of the two stages of the mesh generator process is shown in Figure 4. Boundary 2 is the inlet, boundary 3 is the outlet, and boundary 1 is the pincell surface. Boundaries 4 through 7 are the lateral faces of the pincell.

Figure 4: Outputs of the mesh generators used for the NekRS flow domain

Next, the .par file contains problem setup information. This input solves for pressure, velocity, and temperature. In the nondimensional formulation, the "viscosity" becomes $1/Re$, where $Re$ is the Reynolds number, while the "thermal conductivity" becomes $1/Pe$, where $Pe$ is the Peclet number. These nondimensional numbers are used to set various diffusion coefficients in the governing equations with syntax like -500.0, which is equivalent in NekRS syntax to $\frac{1}{500.0}$. On the four lateral faces of the pincell, we set symmetry conditions for velocity.
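The negative-value shorthand can be made concrete with a few lines of Python. The sketch below interprets a .par-style entry, where a negative value -X stands for 1/X, and evaluates it for the Reynolds and Prandtl numbers in Table 1 using Pe = Re · Pr. The helper `nekrs_coefficient` is hypothetical, and the conductivity entry shown is an assumed analogue of the viscosity entry rather than a value copied from fluid.par.

```python
def nekrs_coefficient(par_value):
    """Interpret a .par diffusion coefficient: a negative entry -X means 1/X."""
    if par_value < 0.0:
        return 1.0 / abs(par_value)
    return par_value


reynolds = 500.0     # Table 1
prandtl = 0.00436    # Table 1
peclet = reynolds * prandtl

viscosity_entry = -reynolds    # written as -500.0 in the .par file
conductivity_entry = -peclet   # assumed analogous entry for the energy equation

print("Pe =", peclet)
print("nondimensional viscosity    =", nekrs_coefficient(viscosity_entry))
print("nondimensional conductivity =", nekrs_coefficient(conductivity_entry))
```

The Peclet number of roughly 2.2 reflects the very low Prandtl number of sodium.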

Next, the .udf file is used to set up initial conditions for velocity, pressure, and temperature. We set uniform axial velocity initial conditions, and temperature to a linear variation from 0 at the inlet to 1 at the outlet.

In the .oudf file, we define boundary conditions. On the flux boundary, we will be sending a heat flux from MOOSE into NekRS, so we set the flux equal to the scratch space array, bc->usrwrk[bc->idM].

Multiphysics Coupling

In this section, OpenMC, NekRS, and MOOSE are coupled for multiphysics modeling of an SFR pincell. This section describes all input files.

OpenMC Input Files

The neutron transport is solved using OpenMC. The input file for this portion of the physics is openmc.i. We begin by defining a number of constants and setting up the mesh mirror. For simplicity, we just use the same mesh as used in the solid application, shown in Figure 3.

Next, we define a number of auxiliary variables to be used for diagnostic purposes. With the exception of the FluidDensityAux, none of the following variables are necessary for coupling, but they will allow us to visualize how data is mapped from OpenMC to the mesh mirror. The FluidDensityAux auxiliary kernel on the other hand is used to compute the fluid density, given the temperature variable temp (into which we will write the MOOSE and NekRS temperatures, into different regions of space). Note that we will not send fluid density from NekRS to OpenMC, because the NekRS model uses an incompressible Navier-Stokes model. But to a decent approximation, the fluid density can be approximated solely as a function of temperature using the SodiumSaturationFluidProperties (so named because these properties represent sodium properties at saturation temperature).
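To give a feel for how strongly sodium density depends on temperature over the range in this tutorial, the sketch below evaluates the Fink and Leibowitz correlation for liquid sodium density along the saturation curve. That this is the correlation implemented by SodiumSaturationFluidProperties is an assumption here; consult the MOOSE source or documentation to confirm.

```python
import math


def sodium_density(temperature):
    """Liquid sodium density [kg/m^3] along the saturation curve, temperature in K.

    Fink and Leibowitz correlation, assumed here for illustration.
    """
    t_crit = 2503.7  # K, critical temperature used by the correlation
    x = 1.0 - temperature / t_crit
    return 219.0 + 275.32 * x + 511.58 * math.sqrt(x)


# Inlet and outlet temperatures from Table 1
for t in (573.0, 708.0):
    print(f"T = {t:.0f} K -> rho = {sodium_density(t):.1f} kg/m^3")
```

The density changes by only a few percent between the inlet and outlet temperatures, so the density feedback to OpenMC in this problem is weak.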

Next, the [Problem] and [Tallies] blocks define all the parameters related to coupling OpenMC to MOOSE. We will send temperature to OpenMC from blocks 2 and 3 (which represent the solid regions) and we will send temperature and density to OpenMC from block 1 (which represents the fluid region). We add a CellTally to tally the heat source in the fuel.

For this problem, the temperature that gets mapped into OpenMC is sourced from two different applications, which we can customize using the temperature_variables and temperature_blocks parameters. Later in the Transfers block, all data transfers from the sub-applications will write into either solid_temp or nek_temp.

Finally, we provide some additional specifications for how to run OpenMC. We set the number of batches and various triggers that will automatically terminate the OpenMC solution when reaching less than 2% maximum relative uncertainty in the fission power tally and 7.5E-4 standard deviation in $k$.
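The stopping logic implied by these two triggers can be written as a simple check. The sketch below is not how Cardinal or OpenMC implement triggers internally; `triggers_satisfied` is a hypothetical helper, and the tally errors and standard deviations passed to it are made-up values.

```python
def triggers_satisfied(tally_rel_errors, k_std_dev,
                       tally_threshold=0.02, k_threshold=7.5e-4):
    """Return True once both stopping criteria are met.

    tally_rel_errors: relative uncertainties of the tally bins
    k_std_dev: standard deviation of the eigenvalue estimate
    """
    max_rel_error = max(tally_rel_errors)
    return max_rel_error < tally_threshold and k_std_dev < k_threshold


# Hypothetical values after some number of batches
converged = triggers_satisfied([0.012, 0.018, 0.015], k_std_dev=6.0e-4)
noisy_bin = triggers_satisfied([0.012, 0.031, 0.015], k_std_dev=6.0e-4)
noisy_k = triggers_satisfied([0.012, 0.018, 0.015], k_std_dev=9.0e-4)
print(converged, noisy_bin, noisy_k)
```

Because the tally criterion applies to the maximum relative uncertainty, a single poorly sampled bin is enough to keep the simulation running.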

(tutorials/pincell_multiphysics/openmc.i)

Next, we set up some initial conditions for the various fields used for coupling.

(tutorials/pincell_multiphysics/openmc.i)

Next, we create a MOOSE heat conduction sub-application, and set up transfers of data between OpenMC and MOOSE. These transfers will send solid temperature and fluid temperature from MOOSE up to OpenMC, and a power distribution to MOOSE.

(tutorials/pincell_multiphysics/openmc.i)

Finally, we will use a Transient executioner and add a number of postprocessors for diagnostic purposes.

(tutorials/pincell_multiphysics/openmc.i)

Solid Input Files

The conservation of solid energy is solved using the MOOSE heat transfer module. The input file for this portion of the physics is bison.i. We begin by defining a number of constants and setting the mesh.

Because some blocks in the solid mesh aren't actually used in the solid solve (i.e. the fluid block we will use to receive fluid temperature and density from NekRS), we need to explicitly tell MOOSE to not throw errors related to checking for material properties on every mesh block.

(tutorials/pincell_multiphysics/bison.i)

Next, we set up a nonlinear variable T to represent solid temperature and create kernels representing heat conduction with a volumetric heating in the pellet region. In the fluid region, we need to use a NullKernel to indicate that no actual solve happens on this block. On the cladding surface, we will impose a Dirichlet boundary condition given the NekRS fluid temperature. Finally, we set up material properties for the solid blocks using HeatConductionMaterial.

Next, we declare auxiliary variables to be used for:

coupling to NekRS (to receive the NekRS temperature, nek_temp)

computing the pin surface heat flux (to send heat flux flux to NekRS)

power to represent the volumetric heating received from OpenMC

On each MOOSE-NekRS substep, we will run MOOSE first. For the very first time step, this means we should set an initial condition for the NekRS fluid temperature, which we simply set to a linear function of height. Finally, we create a DiffusionFluxAux to compute the heat flux on the pin surface.
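A linear initial guess for the fluid temperature can be written as a one-line function of height. The sketch below uses the inlet and outlet temperatures and the height from Table 1, and assumes the inlet sits at z = 0 with z measured in cm; the function name and the coordinate convention are illustrative and may differ from what the input file uses.

```python
def initial_fluid_temperature(z, t_inlet=573.0, t_outlet=708.0, height=50.0):
    """Linear temperature guess [K] versus height z [cm], with the inlet at z = 0."""
    return t_inlet + (t_outlet - t_inlet) * z / height


for z in (0.0, 25.0, 50.0):
    print(f"z = {z:4.1f} cm -> T = {initial_fluid_temperature(z):.1f} K")
```

This guess only matters for the very first MOOSE solve; afterwards the NekRS solution overwrites it.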

(tutorials/pincell_multiphysics/bison.i)

Next, we create a NekRS sub-application and set up the data transfers between MOOSE and NekRS. There are four transfers - three are related to sending the temperature and heat flux between MOOSE and NekRS, and the fourth is related to a performance optimization in the NekRS wrapping that will only interpolate data to/from NekRS at the synchronization points with the application "driving" NekRS (in this case, MOOSE heat conduction).

(tutorials/pincell_multiphysics/bison.i)

Next, we set up a number of postprocessors, both for normalizing the total power sent from MOOSE to NekRS, as well as a few diagnostic terms.
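Normalization is useful because interpolating a heat flux between two different surface meshes generally does not preserve its integral exactly. The sketch below shows the idea on a handful of faces: every flux value is scaled by a single factor so that the surface integral matches a target power. The helper `normalize_flux` and the face data are hypothetical and are not part of Cardinal.

```python
def normalize_flux(fluxes, areas, target_power):
    """Scale face heat fluxes so that their surface integral equals target_power."""
    integral = sum(q * a for q, a in zip(fluxes, areas))
    if integral == 0.0:
        raise ValueError("cannot normalize a flux with zero integral")
    scale = target_power / integral
    return [q * scale for q in fluxes]


# Hypothetical surface faces: fluxes in W/cm^2, areas in cm^2
fluxes = [1.5, 1.7, 1.6]
areas = [50.0, 50.0, 50.0]

normalized = normalize_flux(fluxes, areas, target_power=250.0)
total = sum(q * a for q, a in zip(normalized, areas))
print(normalized, total)
```

A uniform scale factor preserves the shape of the flux distribution while enforcing energy conservation between the two applications.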

(tutorials/pincell_multiphysics/bison.i)

Finally, we set up the MOOSE heat conduction solver to use a Transient executioner and specify the output format. Important to note here is that a user-provided choice of M will determine how many NekRS time steps are run for each MOOSE time step.
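The relationship between M and the time step sizes can be sketched as follows. Because the NekRS time step is nondimensional while MOOSE works in dimensional units, the sketch first multiplies by a reference time scale. All numerical values, the name `t_ref`, and the second ratio `N` (MOOSE solves per OpenMC solve) are assumptions for illustration, not values from the tutorial input files.

```python
import math


def coupled_time_steps(dt_nek_nondim, t_ref, M, N):
    """Return dimensional time steps (NekRS, MOOSE, OpenMC) for subcycling ratios M and N."""
    dt_nek = dt_nek_nondim * t_ref  # convert the NekRS step to dimensional time
    dt_moose = M * dt_nek           # M NekRS steps per MOOSE step
    dt_openmc = N * dt_moose        # N MOOSE steps per OpenMC step
    return dt_nek, dt_moose, dt_openmc


# Hypothetical values
dt_nek, dt_moose, dt_openmc = coupled_time_steps(
    dt_nek_nondim=0.02, t_ref=0.5, M=10, N=3
)
print(dt_nek, dt_moose, dt_openmc)
```

Choosing the outer time steps as whole multiples of the inner ones keeps the synchronization points of the three applications aligned.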

Fluid Input Files

The fluid mass, momentum, and energy transport physics are solved using NekRS. The input file for this portion of the physics is nek.i. We begin by defining a number of constants and by setting up the NekRSMesh mesh mirror. Because we are coupling NekRS via boundary heat transfer to MOOSE, but via volumetric temperature and densities to OpenMC, we need to use a combined boundary and volumetric mesh mirror, so both boundary and volume = true are provided. Because this problem is set up in non-dimensional form, we also need to rescale the mesh to match the units expected by our solid and OpenMC input files.

The bulk of the NekRS wrapping is specified with NekRSProblem. The NekRS input files are in non-dimensional form, whereas all other coupled applications use dimensional units. The various *_ref and *_0 parameters define the characteristic scales that were used to non-dimensionalize the NekRS input. We add a NekBoundaryFlux to pass heat flux into NekRS, and a NekFieldVariable to read temperature out.
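As an example of how characteristic scales connect the two unit systems, the sketch below converts temperature between nondimensional and dimensional form. It assumes the reference temperature is the inlet temperature (573 K) and the temperature scale is the inlet-to-outlet rise (135 K), which is consistent with a nondimensional temperature of 0 at the inlet and 1 at the outlet. The function names are hypothetical, and the actual parameter values should be read from the input file.

```python
def to_dimensional_temperature(t_star, t_ref=573.0, delta_t=135.0):
    """Convert nondimensional temperature to Kelvin: T = T_ref + T* * dT."""
    return t_ref + t_star * delta_t


def to_nondimensional_temperature(t, t_ref=573.0, delta_t=135.0):
    """Convert temperature in Kelvin to nondimensional form: T* = (T - T_ref) / dT."""
    return (t - t_ref) / delta_t


print(to_dimensional_temperature(0.0))       # inlet
print(to_dimensional_temperature(1.0))       # outlet
print(to_nondimensional_temperature(640.5))  # mid-height of a linear profile
```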

Then, we simply set up a Transient executioner with the NekTimeStepper. For postprocessing purposes, we also create two postprocessors to evaluate the average outlet temperature and the maximum fluid temperature. Finally, in order to not overly saturate the screen output, we will only write the Exodus output files and print to the console every 10 Nek time steps.

(tutorials/pincell_multiphysics/nek.i)

Execution

To run the input files,

This will produce a number of output files,

openmc_out.e, OpenMC simulation results, mapped to a mesh mirror

openmc_out_bison0.e, MOOSE heat conduction simulation results

openmc_out_bison0_nek0.e, NekRS simulation results, mapped to a mesh mirror

fluid0.f*, NekRS output files

Figure 5 shows (a) the temperature imposed in OpenMC (the output of the CellTemperatureAux auxiliary kernel); (b) the NekRS fluid temperature; and (c) the MOOSE solid temperature.

Figure 5: Temperatures predicted by NekRS and BISON (middle, right), and what is imposed in OpenMC (left). All images are shown on the same color scale.

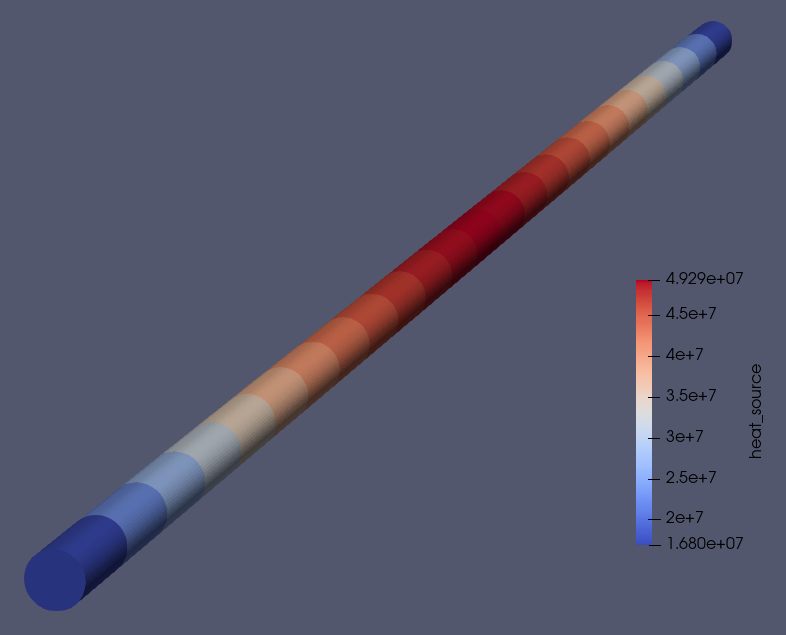

The OpenMC power distribution is shown in Figure 6. The small variations of sodium density with temperature cause the power distribution to be fairly symmetric in the axial direction.

Figure 6: OpenMC predicted power distribution

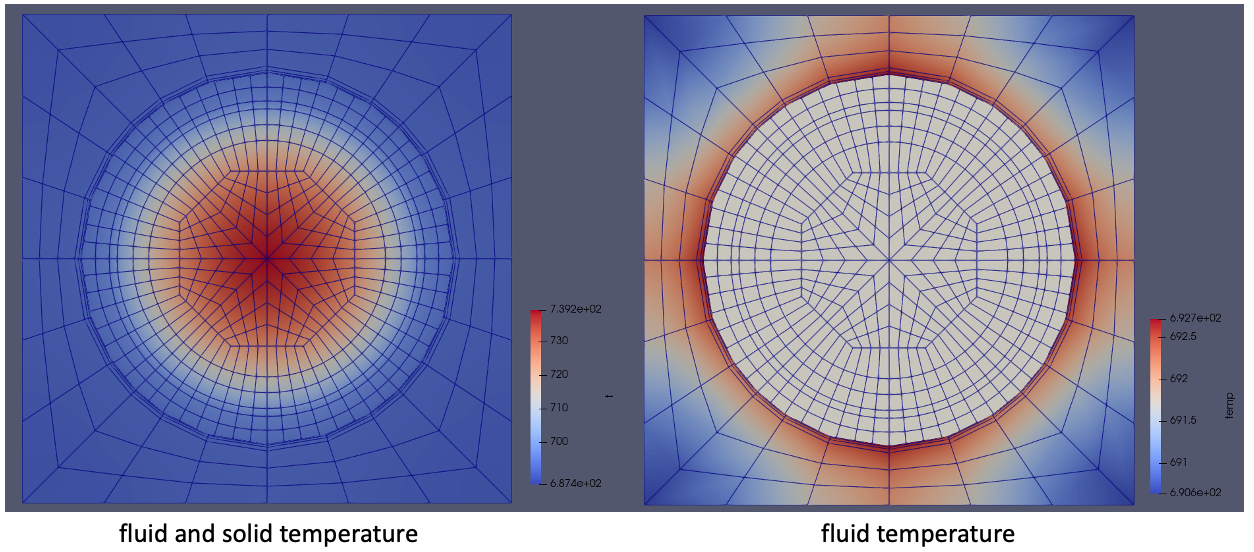

Finally, Figure 7 shows the fluid and solid temperatures (a) with both phases shown and (b) with just the fluid region highlighted. The very low power in this demonstration results in fairly small temperature gradients in the fuel, but what is important to note is that the coupled solution captures typical conjugate heat transfer temperature distributions in rectangular pincells. You can always extend this tutorial to turbulent conditions and increase the power to display temperature distributions more characteristic of real nuclear systems.

Figure 7: Temperatures predicted by NekRS and BISON on the axial midplane.

In this tutorial, OpenMC, MOOSE, and NekRS each used their own time step. You may want to try re-running this tutorial using different time step sizes in the OpenMC and MOOSE heat conduction input files, to explore how to sub-cycle these codes together.