- casenameCase name for the NekRS input files; this is

in .par, .udf, .oudf, and .re2. C++ Type:std::string

Unit:(no unit assumed)

Controllable:No

Description:Case name for the NekRS input files; this is

in .par, .udf, .oudf, and .re2.

NekRSProblem

This class performs all activities related to solving NekRS as a MOOSE application. This class also facilitates data transfers to/from MooseVariables in order to exchange field data between NekRS and MOOSE. This class allows NekRS to be coupled to MOOSE in several different manners:

Boundary coupling via Conjugate Heat Transfer (CHT),

Volume coupling via temperature and heat source feedback, or a

Combination of the above

This class must be used in conjunction with two other classes in Cardinal:

NekRSMesh, which builds a mirror of the NekRS mesh in a MOOSE format so that all the usual Transfers understand how to send data into/out of NekRS. The settings on NekRSMesh also determine which coupling type (listed above) is used.

NekTimeStepper, which allows NekRS to control its own time stepping.

Therefore, we recommend first reading the documentation for the above classes before proceeding here.

The smallest possible MOOSE-wrapped input file that can be used to run NekRS is shown below. casename is the prefix describing the NekRS input files, i.e. this parameter would be casename = 'fluid' if the NekRS input files are fluid.re2, fluid.par, fluid.udf, and fluid.oudf.

Listing 1: Smallest possible NekRS wrapped input file.

(doc/content/source/problems/smallest_input.i)The remainder of this page describes how NekRSProblem wraps NekRS as a MOOSE application.

Initializing MOOSE Fields

When initializing a coupling of NekRS within the MOOSE framework, the first action taken by NekRSProblem is to initialize MOOSE-type variables (MooseVariables) and Postprocessors needed to communicate NekRS's solution with a general MOOSE application.

First, NekRSProblem initializes MooseVariables to receive and write spatial data necessary for multiphysics coupling. Depending on the settings for this class, the following variables will be added:

temp, the NekRS temperature to be sent to MOOSE (this is created for both boundary and volume coupling)avg_flux, the MOOSE surface heat flux to be sent to NekRS (this is created for boundary coupling)heat_source, the MOOSE heat source to be sent to NekRS (this is created for volume coupling)

Here, "boundary coupling" refers to the case when the boundary parameter is specified for the NekRSMesh, while "volume coupling" refers to the case when volume = true is specified for the NekRSMesh. The polynomial order of each of the variables listed above is set to match the order selected in the NekRSMesh.

This initialization of MOOSE variables happens "behind the scenes" - for instance, in the Nek-wrapped input file in Listing 1, we have indicated that we are going to be coupling NekRS through both boundary CHT and volumetric heat sources (because we set volume = true and provided boundary). Therefore, NekRSProblem essentially adds the following to the input file:

This auxiliary variable addition happens automatically in order to simplify the input file creation, so you don't need to add these variables yourself. In addition to these auxiliary variables, NekRSProblem also automatically adds several Receiver postprocessors that are used for ensuring conservation in the data transfers:

flux_integral, or the total integrated heat flux to be conserved from the coupled MOOSE application (boundary coupling)source_integral, or the total integrated heat source (volumetric) to be conserved from the coupled MOOSE application (volume coupling)

Therefore, the second thing that NekRSProblem does is to essentially add the following to the input file:

Overall Calculation Methodology

NekRSProblem inherits from the ExternalProblem class. For each time step, the calculation proceeds according to the ExternalProblem::solve() function. Data gets sent into NekRS, NekRS runs a time step, and data gets extracted from NekRS. NekRSProblem mostly consists of defining the syncSolutions and externalSolve methods. Each of these functions is now described.

External Solve

The actual solve of a timestep by NekRS is peformed within the externalSolve method, which essentially performs the following.

These four functions are defined in the NekRS source code, and perform the following:

Run a single time step

Copy the device-side solution to the host-side (for access in various MOOSE-style postprocessors)

Execute a user-defined function in NekRS,

UDF_ExecuteStep, for Nek-style postprocessingWrite a NekRS output file

Because externalSolve is wrapped inside two syncSolutions calls, this means that for every NekRS time step, data is sent to and from NekRS, even if NekRS runs with a smaller time step than the MOOSE application to which it is coupled (i.e. if the data going into NekRS hasn't changed since the last time it was sent to NekRS). A means by which to reduce some of these (technically) unnecessary data transfers is described in Reducing CPU/GPU Data Transfers .

Transfers to NekRS

In the TO_EXTERNAL_APP data transfer, MooseVariables are read from the NekRSMesh mesh mirror and interpolated onto the NekRS Gauss-Lobatto-Legendre (GLL) points corresponding to each node using Vandermonde matrices. The specific data transfers going into NekRS are determined based on how the NekRSMesh was constructed, i.e. whether boundary and/or volume coupling is used.

Data is "sent" into NekRS by writing into the nrs->usrwrk scratch space array, which NekRS makes available within the boundary condition functions in the .oudf file (on device, this array is technically called the nrs->o_usrwrk array). Table 1 shows the assignment of "slots" in the nrs->usrwrk scratch space array with quantities written by Cardinal. Because different quantities are written into Cardinal depending on the problem setup, if a particular slice is not needed for a case, it will be skipped. That is, the order of the various quantities is always the same in nrs->usrwrk, but with any unused slots cleared out.

Table 1: Quantities written into the scratch space array by Cardinal. In the last column, n indicates that the value is case-dependent depending on what features you have enabled.

| Slice | Quantity | When Will It Be Created? | How to Access in the .oudf File |

|---|---|---|---|

| 0 | Boundary heat flux | if boundary is set on NekRSMesh | bc->usrwrk[n * bc->fieldOffset + bc->idM] |

| 1 | Volumetric heat source | if volume is true on NekRSMesh | bc->usrwrk[n * bc->fieldOffset + bc->idM] |

| 2 | Mesh x-displacement | if moving_mesh is true on NekRSMesh | bc->usrwrk[n * bc->fieldOffset + bc->idM] |

| 3 | Mesh y-displacement | if moving_mesh is true on NekRSMesh | bc->usrwrk[n * bc->fieldOffset + bc->idM] |

| 4 | Mesh z-displacement | if moving_mesh is true on NekRSMesh | bc->usrwrk[n * bc->fieldOffset + bc->idM] |

The total number of slots in the scratch space that are allocated by Cardinal is controlled with the n_usrwrk_slots parameter. If you need to use extra slices in nrs->usrwrk for other custom user actions, simply set n_usrwrk_slots to be greater than the number of slots strictly needed for coupling. At the start of your Cardinal simulation, a table will be printed to the screen to explicitly tell you what each slice in the scratch space holds. Any extra slots are noted as unused, and are free for non-coupling use.

For example, if your case couples NekRS to MOOSE via volumes by setting volume = true, but has boundary unset and moving_mesh = false, the slice normally dedicated to storing heat flux is skipped. A table similar to the following would print out at the start of your simulation. You could use slices 1 onwards for custom purposes.

Listing 2: Table printed at start of Cardinal simulation that describes available scratch space for a case that couples NekRS to MOOSE via volumetric power density, but not via boundaries or a moving mesh. A total of 7 slots are allocated by setting n_usrwrk_slots to 7

Allocation of nrs->usrwrk and nrs->o_usrwrk is done automatically. If you attempt to run a NekRS input file that accesses bc->usrwrk in the .oudf file without a Cardinal executable (e.g. like nrsmpi case 4), then that scratch space will have to be manually allocated in the .udf file, or else your input will seg fault. This will not be typically encountered by most users, but if you really do want to run the NekRS input files intended for a Cardinal case with the NekRS executable (perhaps for debugging), we recommend simply replacing bc->usrwrk by a dummy value, such as bc->flux = 0.0 for the boundary heat flux use case. This just replaces a value that normally comes from MOOSE by a fixed value. All other aspects of the NekRS case files should not require modification.

Boundary Transfers

If boundary was specified on NekRSMesh, then a heat flux (stored in the variable avg_flux) and the total heat flux integral over the boundary for normalization (stored in the postprocessor flux_integral) are sent to NekRS. Then, all that is required to use a heat flux transferred by MOOSE is to apply it in the scalarNeumannConditions boundary condition. Below, bc->usrwrk is the same as nrs->o_usrwrk, or the scratch space on the device; this function applies the heat flux computed by MOOSE to the flux boundaries.

Volume Transfers

If volume = true is specified on NekRSMesh, then a volumetric heat source (stored in the variable heat_source) and the total heat source integral over the volume for normalization (stored in the postprocessor source_integral) are sent to NekRS. Then, all that is required to use a volumetric heat source transferred by MOOSE is to apply it with a custom source kernel in the .oudf file. Below is an example of a custom heat source kernel, arbitrarily named mooseHeatSource - this code is executed on the Graphics Processing Unit (GPU), and is written in OKL, a decorated C++ kernel language. This code loops over all the NekRS elements, loops over all the GLL points on each element, and then sets the volumetric heat source equal to a heat source passed into the mooseHeatSource function.

The actual passing of the nrs->usrwrk scratch space (that NekRSProblem writes into) occurs in the .udf file. In the .udf file, you need to define a custom function, named artbirarily here to userq, with a signature that matches the user-defined source function expected by NekRS. This function should then pass the scratch space into the Open Concurrent Compute Abstraction (OCCA) kernel we saw in the .oudf file. Note that the "offset" here should match the slice in the scratch space array which holds the volumetric heat source.

Finally, be sure to "load" the custom heat source kernel and create the pointer needed by NekRS to call that function. These two extra steps are quite small - the entire .udf file required to apply a MOOSE heat source is shown below (a large part of this file is applying initial conditions, which is unrelated to the custom heat source).

If your case involves applying a heat source to NekRS from MOOSE, you can simply copy the OCCA kernel and .udf file build/load commands for your case - no modifications should be required. If needed, please consult the NekRS documentation for more information on applying custom sources.

If NekRSMesh has both boundary and volume coupling specified, then both the boundary and volume data transfers will occur - a volume mesh is constructed that communciates a volumetric heat source, a volumetric temperature, and a boundary heat flux. In NekRS's .oudf file, you will apply a heat flux in the scalarNeumannConditions function and a volumetric heat source in a source OCCA kernel.

Transfer from NekRS

In the FROM_EXTERNAL_APP data transfer, MooseVariables are written to the NekRSMesh by interpolating from NekRS's GLL points using Vandermonde matrices. The data transfers coming from NekRS are determined based on how the NekRSMesh was constructed, i.e. whether boundary and/or volume coupling is used.

Boundary Transfers

If boundary was specified on NekRSMesh, then a temperature (stored in the variable temp) is written by NekRS by reading from the corresponding boundary in the array storing the passive scalars in NekRS, or nrs->cds->S.

Volume Transfers

If volume = true was specified on NekRSMesh, then the temperature (stored in the variable temp) is written by NekRS by reading from the entire volume in the array storing the passive scalars in NekRS, or nrs->cds->S. In other words, the boundary and volume transfers are basically the same coming from NekRS - the temperature is written into the temp variable. The entire volume data is written for volume transfers, whereas only the temperature on the specified boundaries is written for boundary transfers.

What are the Fluxes?

There are a few different heat fluxes involved when coupling NekRS via CHT to MOOSE. When you run a CHT calculation, for each time step you will see something like the following printed to the screen:

Here, the "total NekRS flux of ..." is the integral of nrs->usrwrk over the high-order NekRS spectral element mesh. Conversely, the "conserved MOOSE value of ..." is the total heat flux that MOOSE is setting in NekRS (which you are transferring via a postprocessor to the flux_integral postprocessor in the NekRS MOOSE-wrapped input file). These two numbers will be different unless all of the following are true:

The NekRS and MOOSE meshes are identical

The NekRS polynomial order matches the MOOSE polynomial order, and for the same polynomial order the nodes are the same. Because NekRS is a spectral element method and MOOSE uses the finite element method, this criteria can only occur if NekRS and MOOSE are either 1st or 2nd order (because the node placement for 1st or 2nd order elements is the same for spectral and finite elements).

The quadrature rule used to integrate in MOOSE is the GLL quadrature

Any differences between the "total NekRS flux" and the "conserved MOOSE value" will simply arise due to differences in the integration of a field over their respective meshes. You may see large differences if the geometry is curved, since the NekRS high-order mesh will capture the curvature and result in very different area integrals. We always recommend doing a sanity check on the flux sent from MOOSE to NekRS via the MOOSE output files.

You can also monitor the heat flux in NekRS by computing using a NekHeatFluxIntegral postprocessor. In general, the quantity computed by this postprocessor will not match the heat flux set by MOOSE because both the finite and spectral element methods solve weak forms where Neumann boundary conditions are only weakly imposed. That is, we set the heat flux but only enforce it by driving the entire nonlinear residual to a small-enough number, so heat flux boundary conditions are never perfectly observed like Dirichlet boundary conditions are.

What Order Does Everything Happen in?

It can be helpful to understand exactly the order in which different data transfers and other operations occur within the NekRS-MOOSE wrapping. For every time step of NekRS, we call this function:

(contrib/moose/framework/src/problems/ExternalProblem.C)A detailed breakdown of each of these three basic steps is provided below:

Other Features

This class mainly facilitates data transfers to and from NekRS. A number of other features are implemented in order to enable nondimensional solutions, improved communication, and convenient solution modifications. These are described in this section.

Nondimensional Solution

NekRS is most often solved in nondimensional form, such that all solution variables are of order unity by normalizing by problem-specific characteristic scales. However, most other MOOSE applications use dimensional units. When transferring field data to/from NekRS or when postprocessing the NekRS solution, it is important for the NekRS solution to match the dimensional solution of the coupled MOOSE application. For physical intuition, it is also often helpful in many cases to visualize and interpret a NekRS solution in dimensional form. Cardinal automatically handles conversions between dimensional and non-dimensional form if you add a Dimensionalize sub-block. Please consult the documentation for Dimensionalize for more information.

Outputting the NekRS Solution

This class enables extracting a number of fields from the NekRS solution onto the NekRSMesh mesh mirror in addition to any fields that are already interpolated onto the mesh mirror for purposes of multiphysics coupling to another MOOSE application. This feature can be used for:

Quick visualization of the NekRS solution without needing to rely on NekRS's custom output format

Postprocessing the NekRS solution using MOOSE's systems

Providing one-way coupling to other MOOSE applications, such as for transporting scalars based on NekRS's velocity solution or for projecting NekRS turbulent viscosity closure terms onto another MOOSE application's mesh

This output feature is used by specifying the fields to be output with the output parameter. Available output fields include:

pressure(which creates a MOOSE variable namedP)velocity(which creates MOOSE variables namedvel_x,vel_y, andvel_z)temperature(which creates a MOOSE variable namedtemp)scalar01(which creates a MOOSE variable namedscalar01)scalar02(which creates a MOOSE variable namedscalar02)scalar03(which creates a MOOSE variable namedscalar03)

For NekRS simulations that are coupled to MOOSE, the temperature will already be output because it is used as part of the physics transfers.

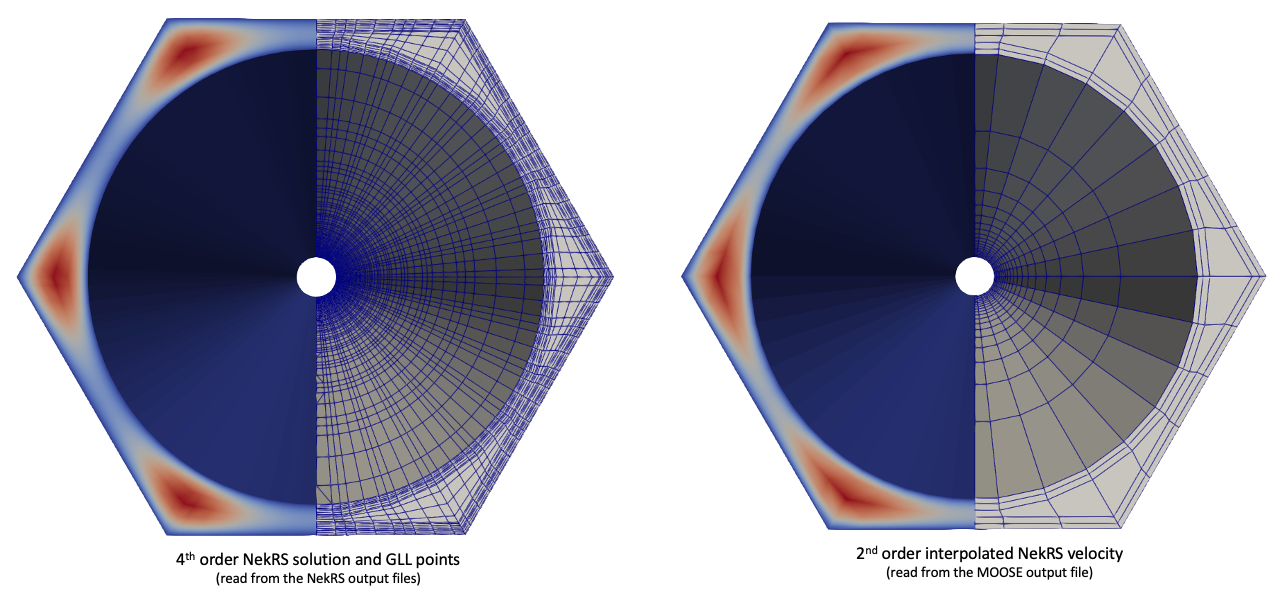

When outputting data, the NekRS solution fields are interpolated from the GLL points onto the NekRSMesh, which will be either a first or second order representation of the NekRS mesh. This interpolation is performed using Vandermonde matrices. Therefore, the output solution is generally not an exact representation of NekRS's solution, for which the NekRS field files are still required to visualize fully. Because the MOOSE framework supports many different output formats, this is a convenient manner by which to obtain a representation of the NekRS solution in Exodus, VTK, CSV, and other formats.

If volume = true is specified on the NekRSMesh, the output solutions are represented over a volume mesh mirror. Otherwise, if volume = false, the solution is shown only on the boundaries specified with the boundary parameter.

For example, consider a CHT simulation where NekRS is coupled to MOOSE through a boundary. Normally, the NekRSMesh contains only the data that is used to couple NekRS to MOOSE - the NekRS wall temperature and the MOOSE heat flux. The input file below will interpolate the NekRS pressure and velocity solutions onto the mesh mirror. We set volume = true for the mesh mirror so that the pressure and velocity are represented over the volume for visualization. Because setting volume = true normally indicates that you want to couple NekRS to MOOSE by a volumetric heat source (which we don't just for visualization purposes), we set has_heat_source = false so that various error checks related to volume-based coupling to MOOSE are skipped.

For instance, Figure 1 shows the velocity from the NekRS field files (left) and interpolated onto a second-order mesh mirror (right). Because this particular example runs NekRS in a higher order than can be represented on a second-order mesh mirror, the interpolated velocity is clearly not an exact representation of the NekRS solution - only an interpolated version of the NekRS solution.

Figure 1: Velocity from the NekRS field files (left) and after interpolation onto a second order mesh mirror (right).

Outputting the Scratch Array

This class (and all other NekRS wrappings in Cardinal) allows you to write slots in the nrs->usrwrk scratch space array to NekRS field files. This can be useful for viewing the data sent from MOOSE to NekRS (for problem classes that involve multiphysics), as well as to visualize custom user usage of nrs->usrwrk, such as for fetching a wall distance computation from the Nek5000 backend. To write the scratch space to a field file, set usrwrk_output to an array with each "slot" in the nrs->usrwrk array that you want to write. Then, specify a filename prefix to use to name each field file.

In the example below, the first two "slots" in the nrs->usrwrk array will be written to field files on the same interval that NekRS writes its usual field files. These files will be named aaabrick0.f00001, etc. and cccbrick0.f00001, etc. Based on limitations in how NekRS writes its files, the fields written to these files will all be named temperature when visualized.

Reducing CPU/GPU Data Transfers

As shown in External Solve , for every NekRS time step, data is passed into NekRS (which involves interpolating from a MOOSE mesh to a (usually) higher-order NekRS mesh and then copying this field from host to device) and data is passed out from NekRS (which involves copying this field from device to host and then interpolating from a (usually) higher-order NekRS mesh to a MOOSE mesh). When NekRS is run in standalone mode, data is only copied between the device and host when an output file is written.

If NekRS is run as a sub-application to a master application, and sub-cycling is used, a lot of these interpolations and Central Processing Unit (CPU)/GPU data transfers can be omitted. When you run a Nek-wrapped input file, timing estimates for these data transfers are printed to the screen. Even for NekRS meshes with a few million elements, these interpolations to/from NekRS typically require only fractions of a second. But for some use cases, you may want to reduce as many of these communciations as possible.

First, let's explain what MOOSE does in the usual master/sub coupling scheme, using boundary coupling with subcycling = true as an example. Suppose you have a master application with a time step size of 1 second, and run NekRS as a sub-application with a time step size of 0.4 seconds that executes at the end of the master application time step. The calculation procedure involves:

Solve the master application from to seconds.

Transfer a variable representing flux (and its integral) from the master application to the NekRS sub-application) at .

Interpolate the flux from the NekRSMesh onto NekRS's GLL points, then normalize it and copy it from host to device.

Run a NekRS time step from to seconds.

Copy the temperature from device to host and then interpolate NekRS's temperature from NekRS's GLL points to the

NekRSMesh.Even though the flux data hasn't changed, and even though the temperature data isn't going to be used by the master application yet,

ExternalProblem::solve()performs data transfers in/out of NekRS for every NekRS time step. Regardless of whether that data has changed or is needed yet by MOOSE, repeat steps 3-5 two times - once for a time step size of 0.4 seconds, and again for a time step size of 0.2 seconds (for the NekRS sub-application to "catch up" to the master application's overall time step length of 1 second.

If NekRS is run with a time step times smaller than its master application, this structuring of ExternalProblem represents unnecessary interpolations and CPU to GPU copies of the flux, and unnecessary GPU to CPU copies of the temperature and interpolations. NekRSProblem contains features that allow you to turn off these extra transfers. However, MOOSE's MultiApp system is designed in such a way that sub-applications know very little about their master applications (and for good reason - such a design is what enables such flexible multiphysics couplings). So, the only way that NekRS can definitively know that a data transfer from a master application is the first data transfer after the flux data has been updated, we monitor the value of a dummy postprocessor sent by the master application to NekRS. In other words, we define a postprocessor in the master application that just has a value of 1.

We define this postprocessor as a Receiver postprocessor, but we won't actually use it to receive anything from other applications. Instead, we set the default value to 1 in order to indicate "true". Then, at the same time that we send new flux values to NekRS, we also pass this postprocessor.

We then receive this postprocessor in the sub-application. This basically means that, when the flux data is new, the NekRS sub-application will receive a value of "true" from the master-application (through the lens of this postprocessor), and send the data into NekRS.

For data transfer out of NekRS, we determine when the temperatue data is ready for use by MOOSE by monitoring how close the sub-application is to the synchronization time to the master application.

All that is required to use this reduced communication feature are to define the dummy postprocessor in the master application, and transfer it to the sub-application. Then, set the following options in NekRSProblem, which here are shown minimizing the communication going in and out of NekRS.

When the interpolate_transfers = true option is used by the TransientMultiApp, MOOSE interpolates the heat flux that gets sent to NekRS for each NekRS time step based on the master application time steps bounding the NekRS step. That is, if MOOSE computes the heat flux at two successive time steps to be and , and NekRS is being advanced to a sub-cycled step , then if interpolate_transfers = true, the avg_flux variable actually is a linear interpolation of the two flux values at the end points of the master application's solve interval, or . Using this "minimal transfer" feature will ignore the fact that MOOSE is interpolating the heat flux.

Flux Normalization

By default, NekRSProblem will lump all "receiving" sidesets in NekRS together for the purpose of flux normalization. For example, suppose we are coupling NekRS to MOOSE heat conduction through two boundaries. Suppose MOOSE heat conduction predicts the following heat flux integrals on each of these boundaries:

MOOSE boundary 1: 100.0 W

MOOSE boundary 2: 200.0 W

When this data gets mapped to NekRS's spectral element mesh, we need to renormalize to ensure that we conserve power. This renormalization is necessary because the NekRS mesh can be entirely different from the MOOSE mesh, and integrating a nodal field on an origin mesh from MOOSE (say, a 1-st order Lagrange interpolation) will give a different integral value than integrating the interpolated nodal values on the receiving NekRS mesh (say, a 7-th order Lagrange interpolation of GLL points). By default, NekRSProblem lumps all receiving sidesets in the NekRS model together for the sake of normalization. For example, suppose that once received on the NekRS mesh and integrated, that the received flux has values of:

NekRS boundary 1: 102.0 W

NekRS boundary 2: 199.0 W

By default, this class will renormalize the NekRS flux in order to match the total MOOSE flux (of 300.0 W) to give:

NekRS boundary 1:

NekRS boundary 2:

The total power entering the flux is preserved, but not the distribution among the sidesets. This is usually a very small error and is an acceptable approximation for many analyses. However, we do also support an option where the heat flux on each sideset is preserved with the conserve_flux_by_sideset option. When setting this parameter to true, the heat flux on each NekRS sideset will instead be evaluated as:

NekRS boundary 1:

NekRS boundary 2:

This option requires using a vector postprocessor (we typically recommend the VectorOfPostprocessors object) to send indiviudal boundary flux values to preserve. This more advanced option cannot be used if:

Your sidesets in the coupled MOOSE App are not individually specified. For instance, if your heat conduction solver has one sideset that maps to two NekRS sidesets, there's no natural way to figure out what the heat flux is in those sub-sidesets to send a correct value to NekRS.

Any nodes are shared among the NekRS sidesets, such as a node on a corner between two sidesets. When we renormalize the NekRS flux, nodes that are present on more than one sideset will get renormalized multiple times, so there is no guarantee that we can enforce the total flux.

Limiting Temperature

For many NekRS simulations, such as those with sharp interior corners, it is often of use to "clip" the temperature to prevent oscillations. The temperature extracted from NekRS can be clipped before being transferred to a coupled MOOSE application by providing either one of both of the min_T and max_T postprocessors. With these specified, the temperature written to the NekRSMesh is adjusted to the range . min_T and max_T should be given in dimensional units.

Input Parameters

- conserve_flux_by_sidesetFalseWhether to conserve the heat flux by individual sideset (as opposed to lumping all sidesets together). Setting this option to true requires syntax changes in the input file to use vector postprocessors, and places restrictions on how the sidesets are set up.

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether to conserve the heat flux by individual sideset (as opposed to lumping all sidesets together). Setting this option to true requires syntax changes in the input file to use vector postprocessors, and places restrictions on how the sidesets are set up.

- constant_interval1Constant interval (in units of number of time steps) with which to synchronize the NekRS solution

Default:1

C++ Type:unsigned int

Unit:(no unit assumed)

Controllable:No

Description:Constant interval (in units of number of time steps) with which to synchronize the NekRS solution

- disable_fld_file_outputFalseWhether to turn off all NekRS field file output writing (for the usual field file output - this does not affect writing the usrwrk with 'usrwrk_output')

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether to turn off all NekRS field file output writing (for the usual field file output - this does not affect writing the usrwrk with 'usrwrk_output')

- first_reserved_usrwrk_slot0Slice (zero-indexed) in nrs->usrwrk where Cardinal will begin reading/writing data; this can be used to shift the usrwrk slots reserved by Cardinal, so that you can use earlier slices for custom purposes

Default:0

C++ Type:unsigned int

Unit:(no unit assumed)

Controllable:No

Description:Slice (zero-indexed) in nrs->usrwrk where Cardinal will begin reading/writing data; this can be used to shift the usrwrk slots reserved by Cardinal, so that you can use earlier slices for custom purposes

- has_heat_sourceTrueWhether a heat source will be applied to the NekRS domain. We allow this to be turned off so that we don't need to add an OCCA source kernel if we know the heat source in the NekRS domain is zero.

Default:True

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether a heat source will be applied to the NekRS domain. We allow this to be turned off so that we don't need to add an OCCA source kernel if we know the heat source in the NekRS domain is zero.

- initial_flux_integral0Initial value to use for the 'flux_integral' postprocessor, to ensure power conservation; this initial value will be overridden once the coupled app executes its transfer of the boundary power into the 'flux_integral' postprocessor. You may want to use this parameter if NekRS runs first, or if you are running NekRS in isolation but still want to apply a heat flux boundary condition via Cardinal. Remember that this parameter is only used to normalize the 'avg_flux' variable, so you will need to populate an initial shape (magnitude is unimportant because it will be normalized by this parameter).

Default:0

C++ Type:double

Unit:(no unit assumed)

Controllable:No

Description:Initial value to use for the 'flux_integral' postprocessor, to ensure power conservation; this initial value will be overridden once the coupled app executes its transfer of the boundary power into the 'flux_integral' postprocessor. You may want to use this parameter if NekRS runs first, or if you are running NekRS in isolation but still want to apply a heat flux boundary condition via Cardinal. Remember that this parameter is only used to normalize the 'avg_flux' variable, so you will need to populate an initial shape (magnitude is unimportant because it will be normalized by this parameter).

- initial_source_integral0Initial value to use for the 'source_integral' postprocessor, to ensure power conservation; this initial value will be overridden once the coupled app executes its transfer of the volumetric power into the 'source_integral' postprocessor. You may want to use this parameter if NekRS runs first, or if you are running NekRS in isolation but still want to apply a power density via Cardinal. Remember that this parameter is only used to normalize the 'heat_source' variable, so you will need to populate an initial shape (magnitude is unimportant because it will be normalized by this parameter).

Default:0

C++ Type:double

Unit:(no unit assumed)

Controllable:No

Description:Initial value to use for the 'source_integral' postprocessor, to ensure power conservation; this initial value will be overridden once the coupled app executes its transfer of the volumetric power into the 'source_integral' postprocessor. You may want to use this parameter if NekRS runs first, or if you are running NekRS in isolation but still want to apply a power density via Cardinal. Remember that this parameter is only used to normalize the 'heat_source' variable, so you will need to populate an initial shape (magnitude is unimportant because it will be normalized by this parameter).

- max_TIf provided, postprocessor used to limit the maximum temperature (in dimensional form) in the nekRS problem

C++ Type:PostprocessorName

Unit:(no unit assumed)

Controllable:No

Description:If provided, postprocessor used to limit the maximum temperature (in dimensional form) in the nekRS problem

- min_TIf provided, postprocessor used to limit the minimum temperature (in dimensional form) in the nekRS problem

C++ Type:PostprocessorName

Unit:(no unit assumed)

Controllable:No

Description:If provided, postprocessor used to limit the minimum temperature (in dimensional form) in the nekRS problem

- n_usrwrk_slots7Number of slots to allocate in nrs->usrwrk to hold fields either related to coupling (which will be populated by Cardinal), or other custom usages, such as a distance-to-wall calculation (which will be populated by the user from the case files)

Default:7

C++ Type:unsigned int

Unit:(no unit assumed)

Controllable:No

Description:Number of slots to allocate in nrs->usrwrk to hold fields either related to coupling (which will be populated by Cardinal), or other custom usages, such as a distance-to-wall calculation (which will be populated by the user from the case files)

- nondimensionalFalseWhether NekRS is solved in non-dimensional form

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether NekRS is solved in non-dimensional form

- normalization_abs_tol1e-08Absolute tolerance for checking if the boundary heat flux and volumetric heat sources sent from MOOSE to NekRS are re-normalized correctly

Default:1e-08

C++ Type:double

Unit:(no unit assumed)

Controllable:No

Description:Absolute tolerance for checking if the boundary heat flux and volumetric heat sources sent from MOOSE to NekRS are re-normalized correctly

- normalization_rel_tol1e-05Absolute tolerance for checking if the boundary heat flux and volumetric heat sources sent from MOOSE to NekRS are re-normalized correctly

Default:1e-05

C++ Type:double

Unit:(no unit assumed)

Controllable:No

Description:Absolute tolerance for checking if the boundary heat flux and volumetric heat sources sent from MOOSE to NekRS are re-normalized correctly

- not_zeroed_tag_vectorsExtra vector tags which the sytem will not zero when other vector tags are zeroed. The outer index is for which nonlinear system the extra tag vectors should be added for

C++ Type:std::vector<std::vector<TagName>>

Unit:(no unit assumed)

Controllable:No

Description:Extra vector tags which the sytem will not zero when other vector tags are zeroed. The outer index is for which nonlinear system the extra tag vectors should be added for

- outputField(s) to output from NekRS onto the mesh mirror

C++ Type:MultiMooseEnum

Unit:(no unit assumed)

Controllable:No

Description:Field(s) to output from NekRS onto the mesh mirror

- regard_general_exceptions_as_errorsFalseIf we catch an exception during residual/Jacobian evaluaton for which we don't have specific handling, immediately error instead of allowing the time step to be cut

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:If we catch an exception during residual/Jacobian evaluaton for which we don't have specific handling, immediately error instead of allowing the time step to be cut

- skip_final_field_fileFalseBy default, we write a NekRS field file on the last time step; set this to true to disable

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:By default, we write a NekRS field file on the last time step; set this to true to disable

- solveTrueWhether or not to actually solve the Nonlinear system. This is handy in the case that all you want to do is execute AuxKernels, Transfers, etc. without actually solving anything

Default:True

C++ Type:bool

Unit:(no unit assumed)

Controllable:Yes

Description:Whether or not to actually solve the Nonlinear system. This is handy in the case that all you want to do is execute AuxKernels, Transfers, etc. without actually solving anything

- synchronization_intervalconstantWhen to synchronize the NekRS solution with the mesh mirror. By default, the NekRS solution is mapped to/receives data from the mesh mirror for every time step.

Default:constant

C++ Type:MooseEnum

Unit:(no unit assumed)

Controllable:No

Description:When to synchronize the NekRS solution with the mesh mirror. By default, the NekRS solution is mapped to/receives data from the mesh mirror for every time step.

- usrwrk_outputUsrwrk slot(s) to output to NekRS field files; this can be used for viewing the quantities passed from MOOSE to NekRS after interpolation to the CFD mesh. Can also be used for any slots in usrwrk that are written by the user, but unused for coupling.

C++ Type:std::vector<unsigned int>

Unit:(no unit assumed)

Controllable:No

Description:Usrwrk slot(s) to output to NekRS field files; this can be used for viewing the quantities passed from MOOSE to NekRS after interpolation to the CFD mesh. Can also be used for any slots in usrwrk that are written by the user, but unused for coupling.

- usrwrk_output_prefixString prefix to use for naming the field file(s); only the first three characters are used in the name based on limitations in NekRS

C++ Type:std::vector<std::string>

Unit:(no unit assumed)

Controllable:No

Description:String prefix to use for naming the field file(s); only the first three characters are used in the name based on limitations in NekRS

- write_fld_filesFalseWhether to write NekRS field file output from Cardinal. If true, this will disable any output writing by NekRS itself, and instead produce output files with names a01...a99pin, b01...b99pin, etc.

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether to write NekRS field file output from Cardinal. If true, this will disable any output writing by NekRS itself, and instead produce output files with names a01...a99pin, b01...b99pin, etc.

Optional Parameters

- allow_initial_conditions_with_restartFalseTrue to allow the user to specify initial conditions when restarting. Initial conditions can override any restarted field

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:True to allow the user to specify initial conditions when restarting. Initial conditions can override any restarted field

- restart_file_baseFile base name used for restart (e.g.

/ or /LATEST to grab the latest file available) C++ Type:FileNameNoExtension

Unit:(no unit assumed)

Controllable:No

Description:File base name used for restart (e.g.

/ or /LATEST to grab the latest file available)

Restart Parameters

- control_tagsAdds user-defined labels for accessing object parameters via control logic.

C++ Type:std::vector<std::string>

Unit:(no unit assumed)

Controllable:No

Description:Adds user-defined labels for accessing object parameters via control logic.

- default_ghostingFalseWhether or not to use libMesh's default amount of algebraic and geometric ghosting

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Whether or not to use libMesh's default amount of algebraic and geometric ghosting

- enableTrueSet the enabled status of the MooseObject.

Default:True

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Set the enabled status of the MooseObject.

Advanced Parameters

- kernel_coverage_block_listList of subdomains for kernel coverage check. The meaning of this list is controlled by the parameter 'kernel_coverage_check' (whether this is the list of subdomains to be checked, not to be checked or not taken into account).

C++ Type:std::vector<SubdomainName>

Unit:(no unit assumed)

Controllable:No

Description:List of subdomains for kernel coverage check. The meaning of this list is controlled by the parameter 'kernel_coverage_check' (whether this is the list of subdomains to be checked, not to be checked or not taken into account).

- material_coverage_block_listList of subdomains for material coverage check. The meaning of this list is controlled by the parameter 'material_coverage_check' (whether this is the list of subdomains to be checked, not to be checked or not taken into account).

C++ Type:std::vector<SubdomainName>

Unit:(no unit assumed)

Controllable:No

Description:List of subdomains for material coverage check. The meaning of this list is controlled by the parameter 'material_coverage_check' (whether this is the list of subdomains to be checked, not to be checked or not taken into account).

- material_coverage_checkTRUEControls, if and how a material subdomain coverage check is performed. With 'TRUE' or 'ON' all subdomains are checked (the default). Setting 'FALSE' or 'OFF' will disable the check for all subdomains. To exclude a predefined set of subdomains 'SKIP_LIST' is to be used, while the subdomains to skip are to be defined in the parameter 'material_coverage_block_list'. To limit the check to a list of subdomains, 'ONLY_LIST' is to be used (again, using the parameter 'material_coverage_block_list').

Default:TRUE

C++ Type:MooseEnum

Unit:(no unit assumed)

Controllable:No

Description:Controls, if and how a material subdomain coverage check is performed. With 'TRUE' or 'ON' all subdomains are checked (the default). Setting 'FALSE' or 'OFF' will disable the check for all subdomains. To exclude a predefined set of subdomains 'SKIP_LIST' is to be used, while the subdomains to skip are to be defined in the parameter 'material_coverage_block_list'. To limit the check to a list of subdomains, 'ONLY_LIST' is to be used (again, using the parameter 'material_coverage_block_list').

Simulation Checks Parameters

- parallel_barrier_messagingFalseDisplays messaging from parallel barrier notifications when executing or transferring to/from Multiapps (default: false)

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Displays messaging from parallel barrier notifications when executing or transferring to/from Multiapps (default: false)

- verbose_multiappsFalseSet to True to enable verbose screen printing related to MultiApps

Default:False

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Set to True to enable verbose screen printing related to MultiApps

- verbose_setupfalseSet to 'true' to have the problem report on any object created. Set to 'extra' to also display all parameters.

Default:false

C++ Type:MooseEnum

Unit:(no unit assumed)

Controllable:No

Description:Set to 'true' to have the problem report on any object created. Set to 'extra' to also display all parameters.

Verbosity Parameters

- show_invalid_solution_consoleTrueSet to true to show the invalid solution occurance summary in console

Default:True

C++ Type:bool

Unit:(no unit assumed)

Controllable:No

Description:Set to true to show the invalid solution occurance summary in console

Solution Validity Control Parameters

Input Files

- (test/tests/cht/sfr_pincell/nek.i)

- (test/tests/postprocessors/dimensionless_numbers/dimensional/ranks.i)

- (test/tests/postprocessors/nek_heat_flux_integral/nek.i)

- (test/tests/postprocessors/nek_volume_integral/nondimensional.i)

- (test/tests/postprocessors/nek_side_extrema/nek.i)

- (test/tests/nek_warnings/no_temp_solve/nek.i)

- (test/tests/conduction/identical_interface/pyramid/nek.i)

- (test/tests/cht/nondimensional/nek_exact.i)

- (test/tests/nek_errors/mpi_setup_multiple_inputs/nek.i)

- (test/tests/postprocessors/nek_volume_integral/nek.i)

- (test/tests/deformation/simple-cube/nek.i)

- (tutorials/pebble_67/nek.i)

- (test/tests/nek_file_output/nek_as_sub_even/nek.i)

- (test/tests/cht/pincell_p_v/nek.i)

- (test/tests/nek_errors/invalid_settings/boundary_id.i)

- (test/tests/nek_errors/invalid_settings/mesh.i)

- (test/tests/postprocessors/nek_weighted_side_average/nek.i)

- (test/tests/conduction/nonidentical_interface/cylinders/nek.i)

- (test/tests/nek_mesh/sidesets/pyramid/nek_volume.i)

- (test/tests/postprocessors/nek_volume_average/nondimensional.i)

- (test/tests/userobjects/nek_scalar_value/nek.i)

- (test/tests/conduction/zero_flux/mismatch_nek.i)

- (test/tests/sockeye_coupling/sockeye_sub/nek.i)

- (test/tests/nek_file_output/nek_as_sub/nek.i)

- (test/tests/postprocessors/nek_weighted_side_integral/nek.i)

- (test/tests/nek_errors/deformation/nek.i)

- (test/tests/postprocessors/nek_point_value/usrwrk_units.i)

- (test/tests/auxkernels/heat_transfer_coefficient/nek.i)

- (test/tests/nek_output/nek_fld.i)

- (tutorials/sfr_7pin/nek_fluxflux.i)

- (test/tests/conduction/nonidentical_volume/cylinder/nek_exact.i)

- (test/tests/nek_errors/invalid_settings/executioner.i)

- (tutorials/sfr_7pin/nek.i)

- (test/tests/nek_errors/no_occa_source_kernel/nek.i)

- (test/tests/postprocessors/dimensionless_numbers/dimensional/nek.i)

- (test/tests/nek_mesh/first_order/nek.i)

- (test/tests/deformation/vol-areas/nek.i)

- (test/tests/postprocessors/nek_volume_extrema/nek.i)

- (test/tests/nek_errors/used_scratch/nek.i)

- (tutorials/restart_nek_and_moose/read_from_checkpoints/nek.i)

- (test/tests/nek_errors/invalid_bid_postprocessor/nek.i)

- (test/tests/conduction/identical_interface/cube/nek.i)

- (test/tests/nek_mesh/first_order/nek_volume.i)

- (test/tests/nek_errors/deformation/user/nek.i)

- (test/tests/nek_errors/invalid_settings/nek_bc.i)

- (test/tests/cht/sfr_pincell/nek_isolated.i)

- (test/tests/postprocessors/nek_side_integral/nek.i)

- (test/tests/conduction/zero_flux/nek_disjoint.i)

- (test/tests/conduction/identical_volume/cube/nek.i)

- (test/tests/nek_errors/no_temp_var/nek.i)

- (test/tests/nek_temp/second_order/nek_volume.i)

- (test/tests/nek_stochastic/errors/min_gap.i)

- (test/tests/nek_stochastic/errors/nek_error.i)

- (test/tests/nek_mesh/second_order/nek_volume.i)

- (tutorials/sfr_7pin/nek_vpp.i)

- (test/tests/cht/pebble/nek.i)

- (test/tests/nek_errors/invalid_settings/mesh_scaling.i)

- (test/tests/conduction/zero_flux/nek.i)

- (test/tests/conduction/nonidentical_interface/cylinders/nek_exact.i)

- (tutorials/restart_nek_and_moose/create_checkpoints/nek.i)

- (test/tests/multiple_nek_apps/two_channels/nek.i)

- (test/tests/nek_temp/second_order/nek.i)

- (test/tests/conduction/zero_flux/nek_vpp.i)

- (test/tests/nek_errors/insufficient_scratch/nek_mesh.i)

- (test/tests/cht/pebble/shift/nek.i)

- (test/tests/cht/nondimensional/nek.i)

- (test/tests/nek_errors/invalid_transfer_pp/nek.i)

- (test/tests/nek_errors/invalid_settings/timestepper.i)

- (tutorials/msfr/nek.i)

- (tutorials/fhr_reflector/conduction/nek.i)

- (test/tests/conduction/nonidentical_interface/cylinders/nek_mini.i)

- (tutorials/pincell_multiphysics/nek.i)

- (tutorials/gas_compact_multiphysics/nek.i)

- (test/tests/conduction/nonidentical_volume/cylinder/nek.i)

- (test/tests/postprocessors/nek_volume_average/nek.i)

- (test/tests/nek_file_output/nek_as_master/nek.i)

- (test/tests/nek_stochastic/errors/gap.i)

- (test/tests/nek_temp/first_order/nek.i)

- (test/tests/cht/sfr_pincell/nek_vpp.i)

- (tutorials/fhr_reflector/cht/nek.i)

- (test/tests/conduction/nonidentical_volume/nondimensional/nek.i)

- (test/tests/conduction/boundary_and_volume/prism/nek.i)

- (test/tests/postprocessors/nek_side_average/nek.i)

- (test/tests/postprocessors/nek_side_extrema/nek_p.i)

- (test/tests/nek_file_output/nek_as_master_even/nek.i)

- (tutorials/pebble_cht/nek.i)

- (test/tests/nek_errors/insufficient_scratch/nek.i)

- (test/tests/nek_temp/first_order/nek_volume.i)

- (test/tests/conduction/boundary_and_volume/prism/nek_exact.i)

- (doc/content/source/problems/smallest_input.i)

- (test/tests/nek_mesh/second_order/nek.i)

- (test/tests/postprocessors/nek_usrwrk_boundary_integral/nek.i)

- (tutorials/gas_compact_cht/nek.i)

- (tutorials/nek_stochastic/nek.i)

- (test/tests/deformation/mesh-velocity-areas/nek.i)

- (test/tests/griffin_coupling/nek.i)

- (test/tests/multiple_nek_apps/subdirectory/nek.i)