Multiphysics for a TRISO Gas-Cooled Compact

In this tutorial, you will learn how to:

Couple OpenMC, NekRS/THM, and MOOSE together for multiphysics modeling of a TRISO compact

Use two different MultiApp hierarchies to achieve different data transfers

Use triggers to automatically terminate the OpenMC active batches once reaching the desired statistical uncertainty

Automatically detect steady state

To access this tutorial,

This tutorial also requires you to download mesh files and a NekRS restart file from Box. Please download the files from the gas_compact_multiphysics folder here and place these within the same directory structure in tutorials/gas_compact_multiphysics.

This tutorial requires HPC resources to run the NekRS cases. You will be able to run the OpenMC-THM-MOOSE files without any special resources.

In this tutorial, we couple OpenMC to the MOOSE heat transfer module, with fluid feedback provided by either NekRS or THM,

NekRS: wall-resolved - RANS equations

Thermal Hydraulics Module (THM): 1-D area-averaged Navier-Stokes equations

Two different multiapp hierarchies will be used in order to demonstrate the flexibility of the MultiApp system. The same OpenMC model can be used to provide feedback to different combinations of MOOSE applications.

In this tutorial, OpenMC receives temperature feedback from the MOOSE heat conduction module (for the solid regions) and NekRS/THM (for the fluid regions). Density feedback is provided by NekRS/THM for the fluid regions. This tutorial models a partial-height TRISO-fueled unit cell of a prismatic gas reactor assembly, and is a continuation of the conjugate heat transfer tutorial (where we coupled NekRS and MOOSE heat conduction) and the OpenMC-heat conduction tutorial (where we coupled OpenMC and MOOSE heat conduction) for this geometry.

This tutorial was developed with support from the NEAMS Thermal Fluids Center of Excellence and is described in more detail in our journal article Novak et al. (2022).

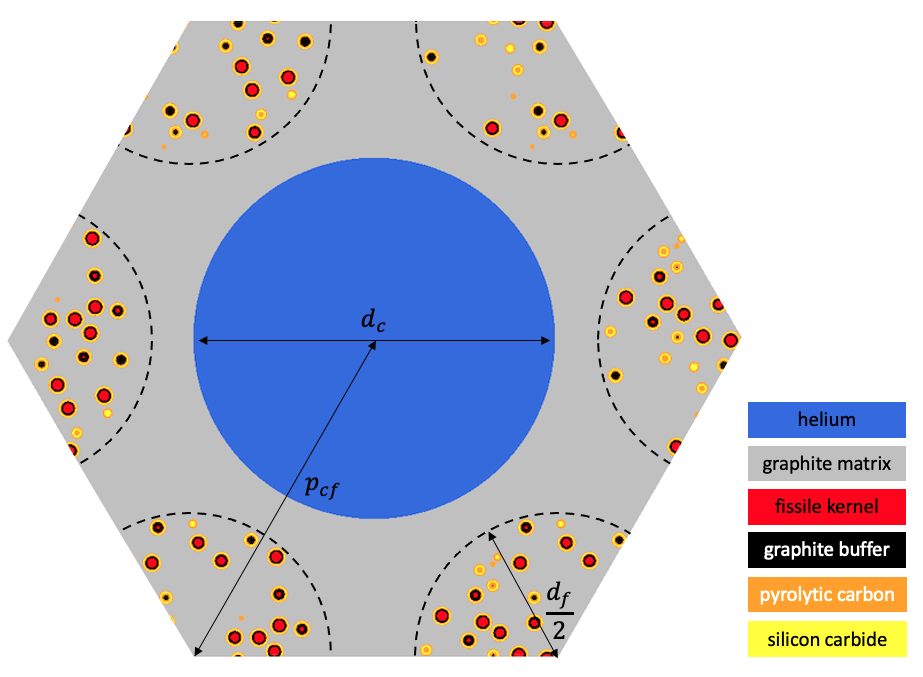

Geometry and Computational Model

The geometry consists of a TRISO-fueled gas reactor compact unit cell INL (2016). A top-down view of the geometry is shown in Figure 1. The fuel is cooled by helium flowing in a cylindrical channel of diameter . Cylindrical fuel compacts containing randomly-dispersed TRISO particles at 15% packing fraction are arranged around the coolant channel in a triangular lattice. The TRISO particles use a conventional design that consists of a central fissile uranium oxycarbide kernel enclosed in a carbon buffer, an inner PyC layer, a silicon carbide layer, and finally an outer PyC layer. The geometric specifications are summarized in Table 1. Heat is produced in the TRISO particles to yield a total power of 38 kW.

Figure 1: TRISO-fueled gas reactor compact unit cell

Table 1: Geometric specifications for a TRISO-fueled gas reactor compact

| Parameter | Value (cm) |

|---|---|

| Coolant channel diameter, | 1.6 |

| Fuel compact diameter, | 1.27 |

| Fuel-to-coolant center distance, | 1.88 |

| Height | 160 |

| TRISO kernel radius | 214.85e-4 |

| Buffer layer radius | 314.85e-4 |

| Inner PyC layer radius | 354.85e-4 |

| Silicon carbide layer radius | 389.85e-4 |

| Outer PyC layer radius | 429.85e-4 |

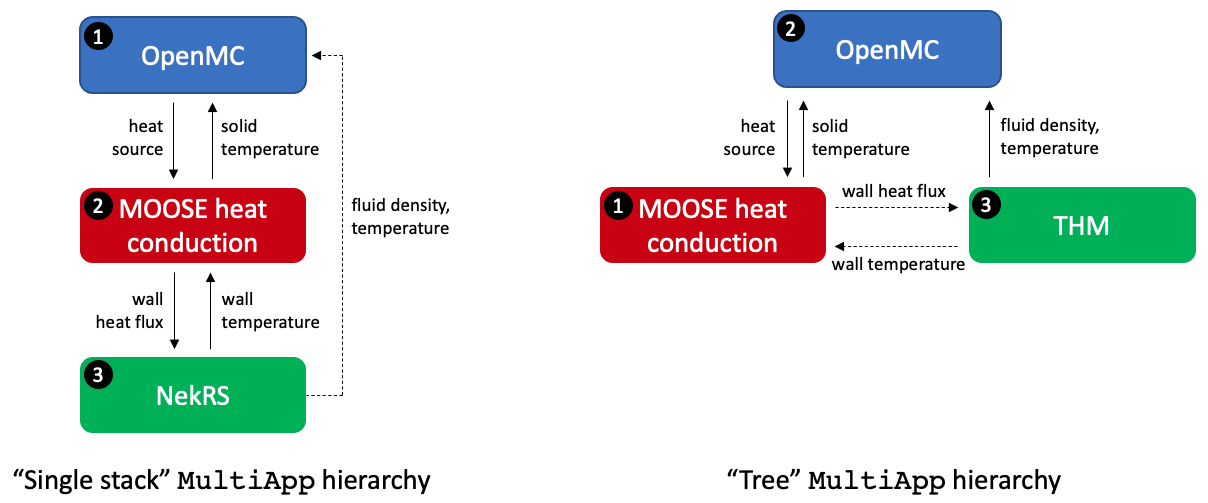

Two different MultiApp hierarchies are used:

A "single stack" design where each application has either a single "parent" application or a single "child" application

A "tree" design where each application has a single "parent" application, but multiple "child" applications

Figure 2 shows a conceptual depiction of these hierarchies. We will describe these in greater detail later, but introduce them here to assist with describing a few aspects of the single-physics models. The circled numbers indicate the order in which the applications run.

Solid lines depict transfers that occur directly from application to application , or between the source and receiver of that field. Dashed lines, on the other hand, depict transfers that do not occur directly between the source and receiver of the field - for instance, in the "single stack" hierarchy, the NekRS application can only communicate data with it's immediate parent application. Therefore, to send fluid density and temperature from NekRS to OpenMC, there are actually two transfers - 1) sending fluid density and temperature from NekRS to the MOOSE heat transfer module, and 2) sending fluid density and temperature from the MOOSE heat transfer module to OpenMC.

Figure 2: MultiApp hierarchies used in this tutorial; data transfers are shown with solid and dashed lines. Solid lines indicate transfers that occur directly from application A to application B, while dashed lines show transfers that have to first pass through an intermediate application to get to the eventual target application.

Conversely, with the "tree" hierarhcy, MOOSE MultiApps communicate with parent/child applications. Therefore, all data communicated between the MOOSE heat transfer module and THM actually has to first pass through their common parent application before reaching the desired target application.

In the time since we originally developed this tutorial, MOOSE has been extended to support sibling transfers, which would allow MOOSE and THM to communicate data directly to one another (in the "tree" hierarchy shown in Figure 2).

OpenMC Model

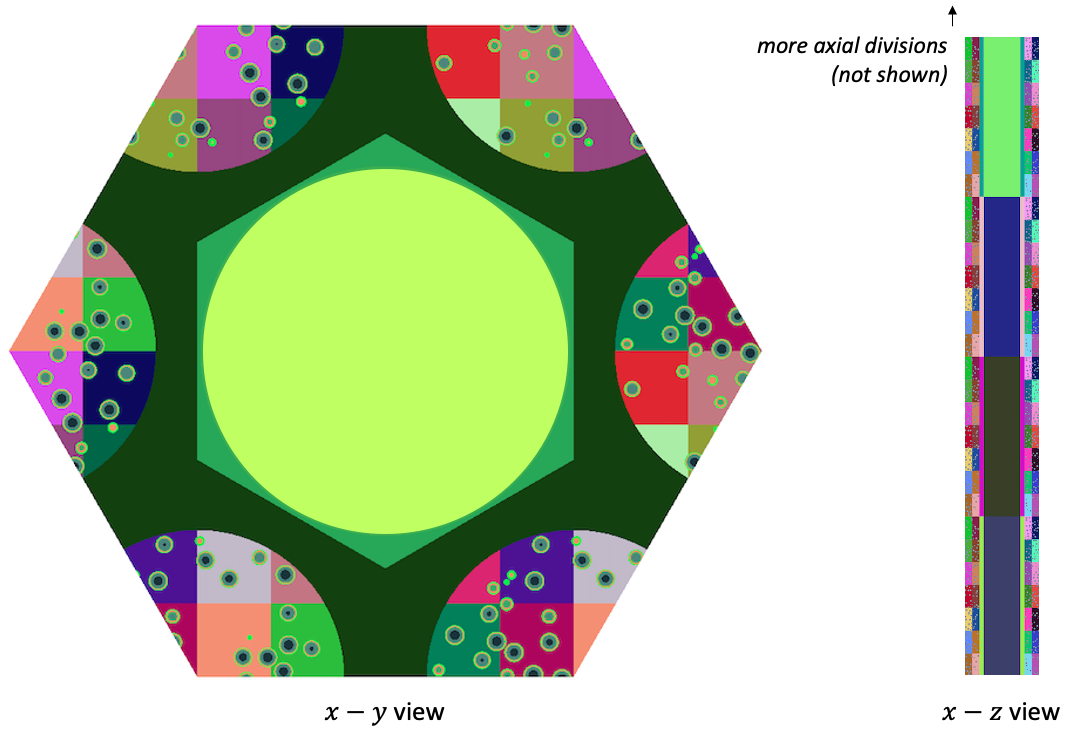

The OpenMC model is built using CSG. The TRISO positions are sampled using the Random Sequential Addition (RSA) algorithm in OpenMC. OpenMC's Python API is used to create the model with the script shown below. First, we define materials. Next, we create a single TRISO particle universe consisting of the five layers of the particle and an infinite extent of graphite filling all other space. We then pack pack uniform-radius spheres into a cylindrical region representing a fuel compact, setting each sphere to be filled with the TRISO universe.

(tutorials/gas_compact_multiphysics/unit_cell.py)Finally, we loop over axial layers and create unique cells for each of the six compacts, the graphite block, and the coolant. Recall that we need unique cells in order for each region to obtain a a unique temperature from MOOSE. The level on which we will apply feedback from MOOSE is set to 1 because each layer is a component in a lattice nested once with respect to the highest level. To accelerate the particle tracking, we:

Repeat the same TRISO universe in each axial layer and within each compact

Superimpose a Cartesian search lattice in the fuel channel regions.

The OpenMC geometry, colored by cell ID, is shown in Figure 3. The lateral faces of the unit cell are periodic, while the top and bottom boundaries are vacuum. The Cartesian search lattice in the fuel compact regions is also visible.

Figure 3: OpenMC model, colored by cell ID

For the "single-stack" MultiApp hierarchy, OpenMC runs first, so the initial temperature is set to uniform in the radial direction and given by a linear variation between the inlet and outlet fluid temperatures. The fluid density is then set using the ideal gas EOS with pressure taken as the fixed outlet of 7.1 MPa given the temperature, i.e. . For the "tree" MultiApp hierarchy, OpenMC instead runs after the MOOSE heat transfer module, but before THM. For this structure, initial conditions are only required for fluid temperature and density, which are taken as the same initial conditions as for the "single-stack" case.

To create the XML files required to run OpenMC, run the script:

You can also use the XML files checked in to the tutorials/gas_compact_multiphysics directory.

Heat Conduction Model

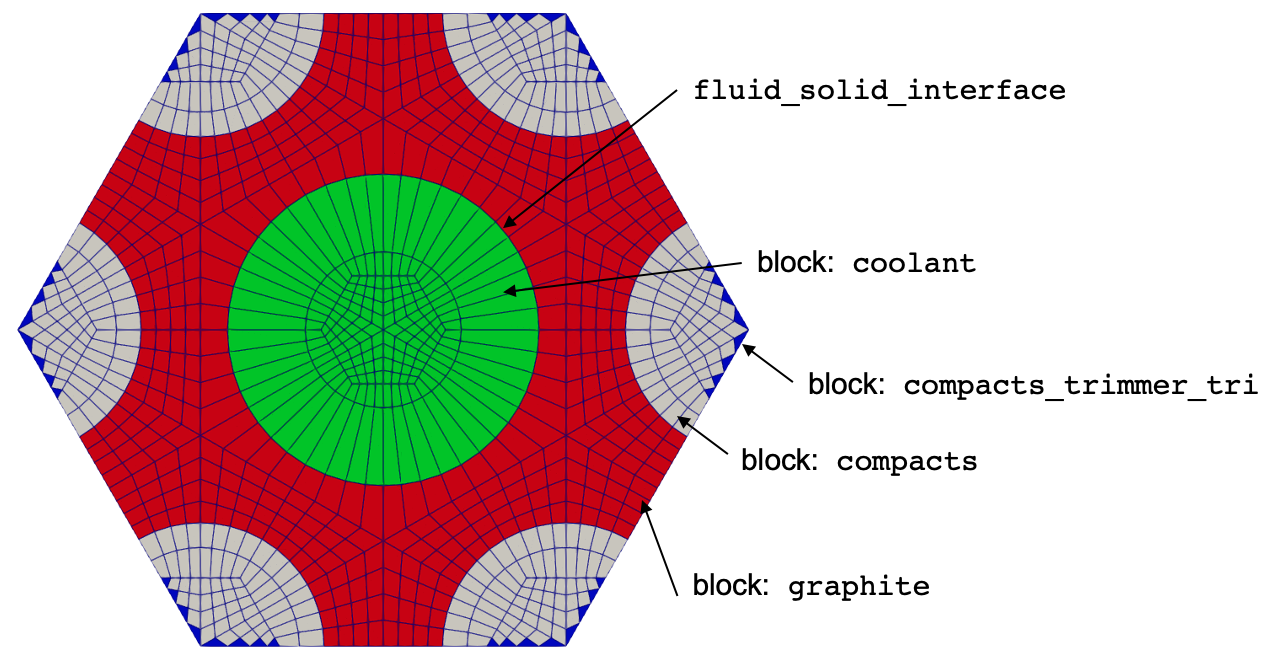

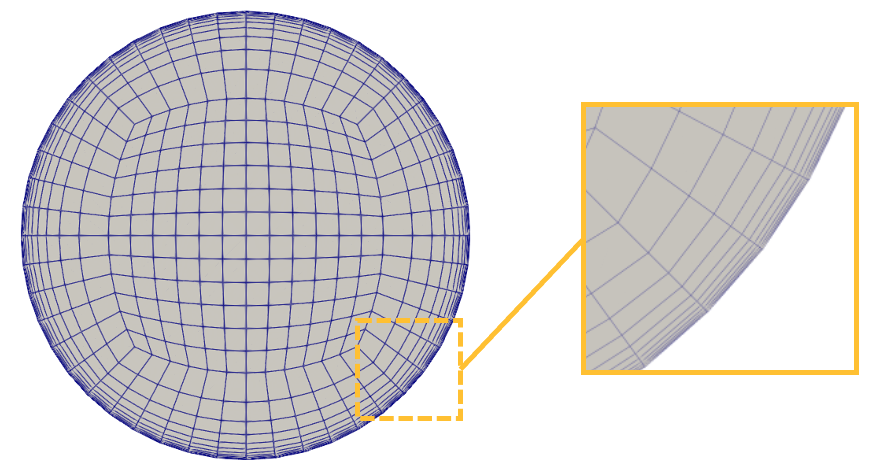

The MOOSE heat transfer module is used to solve for energy conservation in the solid. The solid mesh is shown in Figure 4; the only sideset defined in the domain is the coolant channel surface. The solid geometry uses a length unit of meters.

Figure 4: Mesh for the solid heat conduction model

This mesh is generated using MOOSE mesh generators in the solid_mesh.i file.

We first create a full 7-pin bundle, and then apply a trimming operation to split the compacts. Because MOOSE does not support multiple element types (e.g. tets, hexes) on the same block ID, the trimmer automatically creates an additional block (compacts_trimmer_tri) to represent the triangular prism elements formed in the compacts. Note that within this mesh, we include the fluid region - for the "single stack" MultiApp hierarchy, we will need somewhere for NekRS to write the fluid temperature solution. So, while this block does not participate in the solid solve, we include it in the mesh just for data transfers. You can generate this mesh by running

which will create the mesh, named solid_mesh_in.e.

On the coolant channel surface, a Dirichlet temperature is provided by NekRS/THM. All other boundaries are insulated. The volumetric power density is provided by OpenMC, with normalization to ensure the total specified power. When using the "single stack" hierarchy, MOOSE runs after OpenMC but before NekRS, and an initial condition is only required for the wall temperature, which is set to a linear variation from inlet to outlet fluid temperature. When using the "tree" hierarchy, MOOSE runs first, in which case the initial wall temperature is taken as the same linear variation, while the power is taken as uniform.

NekRS Model

NekRS is used to solve the incompressible k-tau RANS model. The inlet mass flowrate is 0.0905 kg/s; with the channel diameter of 1.6 cm and material properties of helium, this results in a Reynolds number of 223214 and a Prandtl number of 0.655. This highly-turbulent flow results in extremely thin momentum and thermal boundary layers on the no-slip surfaces forming the periphery of the coolant channel. In order to resolve the near-wall behavior with a wall-resolved model, an extremely fine mesh is required in the NekRS simulation. To accelerate the overall coupled solve that is of interest in this tutorial, the NekRS model is split into a series of calculations:

We first run a partial-height, periodic flow-only case to obtain converged , , and distributions.

Then, we extrapolate the and to the full-height case.

We use the converged, full-height and distributions to transport a temperature passive scalar in a CHT calculation with MOOSE.

Finally, we use the converged CHT case as an initial condition for the multiphysics simulation with OpenMC and MOOSE feedback.

Steps 1-3 were performed in an earlier tutorial - for brevity, we skip repeating the discussion of steps 1-3.

For the multiphysics case, we will load the restart file produced from step 3, compute from the loaded solutions for and , and then transport temperature with coupling to MOOSE heat conduction and OpenMC particle transport. Let's now describe the NekRS input files needed for the passive scalar solve:

ranstube.re2: NekRS meshranstube.par: High-level settings for the solver, boundary condition mappings to sidesets, and the equations to solveranstube.udf: User-defined C++ functions for on-line postprocessing and model setupranstube.oudf: User-defined OCCA kernels for boundary conditions and source terms

A detailed description of all of the available parameters, settings, and use cases for these input files is available on the NekRS documentation website. Because the purpose of this analysis is to demonstrate Cardinal's capabilities, only the aspects of NekRS required to understand the present case will be covered. First, the NekRS mesh is shown in Figure 5. Boundary 1 is the inlet, boundary 2 is the outlet, and boundary 3 is the wall. The same mesh was used for the periodic flow solve, except with a shorter height.

Figure 5: Mesh for the NekRS RANS model

Next, the .par file contains problem setup information. This input sets up a nondimensional passive scalar solution, loading , , , and from a restart file. We "freeze" the flow by setting solver = none in the [VELOCITY], [SCALAR01] ( passive scalar), and [SCALAR02] ( passive scalar) blocks. In the nondimensional formulation, the "viscosity" becomes , where is the Reynolds number, while the "thermal conductivity" becomes , where is the Peclet number. These nondimensional numbers are used to set various diffusion coefficients in the governing equations with syntax like -223214, which is equivalent in NekRS syntax to . The only equation that NekRS will solve is for temperature.

Next, the .udf file is used to setup initial conditions and define how should be computed based on and the restart values of and . In turbulent_props, a user-defined function, we use from the input file in combination with the and (read from the restart file later in the .udf file) to adjust the total diffusion coefficient on temperature to according to the turbulent Prandtl number definition. This adjustment must happen on device, in a new GPU kernel we name scalarScaledAddKernel. This kernel will be defined in the .oudf file; we instruct the JIT compilation to compile this new kernel by calling udfBuildKernel.

Then, in UDF_Setup we store the value of computed in the restart file.

In the .oudf file, we define boundary conditions for temperature and also the form of the scalarScaledAdd kernel that we use to compute . The inlet boundary is set to a temperature of 0 (a dimensional temperature of ), while the fluid-solid interface will receive a heat flux from MOOSE.

For this tutorial, NekRS runs last in the "single-stack" MultiApp hierarchy, so no initial conditions are required aside from the , , and taken from the converged_cht.fld restart file on Box.

THM Model

THM is used to solve the 1-D area-averaged Navier-Stokes equations. The THM mesh contains 150 elements; the mesh is constucted automatically within THM. The fluid geometry uses a length unit of meters. The heat flux imposed in the THM elements is obtained by area averaging the heat flux from the heat conduction model in 150 layers along the fluid-solid interface. For the reverse transfer, the wall temperature sent to MOOSE heat conduction is set to a uniform value along the fluid-solid interface according to a nearest-node mapping to the THM elements.

For this tutorial, THM runs last in the "tree" MultiApp hierarchy; because THM solves time-dependent equations, initial conditions are only required for the solution variables for which THM solves - pressure, fluid temperature, and velocity, all of which are set to uniform conditions.

Multiphysics Coupling

In this section, OpenMC, NekRS/THM, and MOOSE heat conduction are coupled for multiphysics modeling of the TRISO gas compact. Two separate simulations are performed here:

Coupling of OpenMC, NekRS, and MOOSE heat conduction in a "single-stack" MultiApp hierarchy

Coupling of OpenMC, THM, and MOOSE heat conduction in a "tree" MultiApp hierarchy

By individually describing the two setups, you will understand the customizability of the MultiApp system and the flexibility shared by all MOOSE applications for seamlessly exchanging tools of varying resolution for one another.

OpenMC-NekRS-MOOSE

In this section, we describe the coupling of OpenMC, NekRS, and MOOSE in the "single-stack" MultiApp hierarchy shown in Figure 2.

OpenMC Input Files

The neutronics physics is solved over the entire domain with OpenMC. The OpenMC wrapping used for the OpenMC-NekRS-MOOSE coupling is described in the openmc_nek.i input file. We begin by defining a number of constants and by setting up the mesh mirror on which OpenMC will receive temperature and density from THM-MOOSE, and on which OpenMC will write the fission heat source. Because the coupled applications use length units of meters, the mesh mirror must also be in units of meters. For simplicity, we just use the same mesh as generated with solid_mesh.i earlier, though this it not necessary.

Next, we define a number of auxiliary variables to query the OpenMC solution and set up multiphysics coupling. We add cell_temperature and cell_density in order to read the cell temperatures and densities directly from OpenMC in order to visualize the temperatures and densities ultimately applied to OpenMC's cells. In order to compute density using the ideal gas EOS given a temperature and a fixed pressure, we use a FluidDensityAux to set density.

Next, we define the necessary initial conditions using functions; all temperatures (nek_temp and solid_temp are to be discussed shortly) are set to a linear variation from the inlet to outlet fluid temperatures.

The wrapping of OpenMC is specified in the [Problem] block and the addition of tallies is done in the [Tallies] block. Here, we indicate that we will provide both temperature and density feedback to OpenMC. In order to visualize the tally standard deviation, we output the fission tally standard deviation using the output parameter. The heat source from OpenMC will be relaxed using Robbins-Monro relaxation.

By default, OpenMC will try to read temperature from the temp variable. However, in this case we have multiple applications (NekRS/THM for the fluids, MOOSE for the solids) which want to send temperatures into OpenMC. To be sure one data transfer does not overwrite the second, we need to tell OpenMC the names of the variables to read temperature from. Cardinal contains convenient syntax to automatically set up the necessary receiver variables for temperature, by using the temperature_variables and temperature_blocks parameters.

Finally, a number of "triggers" are used to automatically terminate OpenMC's active batches once reaching desired uncertainties in and the fission tally. The number of batches here is terminated once both of the following are satisfied:

Standard deviation in is less than 75 pcm

Maximum fission tally relative error is less than 1%

These criteria are checked every batch_interval, up to a maximum number of batches.

Next, we define a transient executioner - while OpenMC is technically solving a steady -eigenvalue problem, using a time-dependent executioner with the notion of a "time step" will allow us to control the frequency with which OpenMC sends data to/from it's sub-app (MOOSE heat conduction). Here, we set the time step to be 1000 times the NekRS fluid time step. We will terminate the solution once reaching the specified steady_state_tolerance, which by setting check_aux = true will terminate the entire solution once reaching less than 1% change in all auxiliary variables in the OpenMC input file (which includes temperatures and power).

Next, we define the MOOSE heat conduction sub-application and data transfers to/from that application. Most important to note is that while the MOOSE heat transfer module does not itself compute fluid temperature, we see a transfer getting the fluid temperature that has been transferred to the MOOSE heat transfer module by the doubly-nested NekRS sub-application.

(tutorials/gas_compact_multiphysics/openmc_nek.i)Next, we define several postprocessors for querying the solution. The heat_source postprocessor will be used to ensure conservation of power when sent to the MOOSE heat conduction application. All other postprocessors are used for general solution monitoring purposes.

For postprocessing, we also compute the average power distribution in a number of layers using a LayeredAverage and output to CSV using a SpatialUserObjectVectorPostprocessor, in combination with a CSV output.

(tutorials/gas_compact_multiphysics/openmc_nek.i)Solid Input Files

The solid heat conduction physics is solved over the solid regions of the unit cell using the MOOSE heat transfer module. The input file for this portion of the physics is the solid_nek.i input. We begin by defining a number of constants and by setting up the mesh for solving heat conduction.

Because we have a block in the problem that we don't need to define any material properties on, we technically need to turn off a material coverage check, or else we're going to get an error from MOOSE. FEProblem is just the default problem, which we need to list in order to turn off the material coverage check.

(tutorials/gas_compact_multiphysics/solid_nek.i)Next, we define the nonlinear variable that this application will solve for (T), the solid temperature. On the solid blocks, we solve the heat equation, but on the fluid blocks that exist exclusively for transferring data, we add a NullKernel to skip the solve in those regions. On the channel wall, the temperature from NekRS is applied as a Dirichlet condition.

Next, we define a number of functions to set the solid material properties and define an initial condition for the wall temperature. The solid material properties are then applied with a HeatConductionMaterial.

(tutorials/gas_compact_multiphysics/solid_nek.i)Next, we define a number of auxiliary variables - power will receive the heat source from OpenMC, nek_temp will receive the wall temperature from NekRS, nek_bulk_temp will receive the volumetric fluid temperature from NekRS, and finally flux will be used to compute the wall heat flux to send to NekRS.

We then add a NekRS sub-application and define the transfers to/from NekRS, including the transfer of fluid temperature from the NekRS volume to the dummy coolant blocks in the MOOSE solid model. Because the NekRS mesh mirror will be a volume mirror (in order to extract volumetric temperatures for the neutronics feedback), a significant cost savings can be obtained by using the "minimal transfer" feature of NekRSProblem (which requires sending a dummy Receiver postprocessor, here named synchronization_to_nek, to indicate when data is to be exchanged). For more information, please consult the documentation for NekRSProblem.

We add several postprocessors to facilitate the data transfers as well as to query the solution.

(tutorials/gas_compact_multiphysics/solid_nek.i)We add a transient executioner - again, even though the MOOSE heat conduction module in this tutorial solves steady equations, a transient executioner allows us to control the frequency with which MOOSE and NekRS iterate the CHT physics. Here, we use a time step that is 50 times bigger than the NekRS time step.

(tutorials/gas_compact_multiphysics/solid_nek.i)The ability to use different data transfer "frequencies" is a key advantage of the "single-stack" MultiApp hierarchy. So far, we have shown that OpenMC will exchange data every 1000 time steps with MOOSE, but MOOSE exchanges data every 50 times steps with NekRS. In other words, for every update of the OpenMC fission distribution, we perform 20 sub-iterations of the CHT physics. Depending on the problem, begin able to iterate the thermal-fluid physics on a finer granularity than the neutronics feedback can be essential to obtaining a stable solution without an inordinately high number of Monte Carlo solves.

Finally, we add a number of LayeredAverage user objects to compute averages of the fuel and graphite temperatures in the axial direction, which are output to CSV using a SpatialUserObjectVectorPostprocessor, in combination with a CSV output.

(tutorials/gas_compact_multiphysics/solid_nek.i)Fluid Input Files

The fluid mass, momentum, and energy transport physics are solved using NekRS. The input file for this portion of the physics is the nek.i input. We begin by defining a number of constants and by setting up the NekRSMesh mesh mirror. Because we are coupling via boundary CHT to MOOSE, we set boundary = '3' so that we will be able to extract the boundary temperature from boundary 3 (the wall). We are also coupling via volumes to OpenMC higher in the MultiApp hierarchy. In order to extract volume representations of the fluid temperature, we also set volume = true.

The bulk of the NekRS wrapping occurs in the [Problem] block with NekRSProblem. The NekRS input files are in non-dimensional form, whereas all other coupled applications use dimensional units. The various *_ref and *_0 parameters define the characteristic scales that were used to non-dimensionalize the NekRS input. In order to simplify the input file, we know a priori that OpenMC will not be sending a heat source to NekRS, so we set has_heat_source = false so that we don't need to add a dummy heat source kernel to the ranstube.oudf file. Finally, we indicate that we will be minimizing the data transfers in/out of NekRS unless new data is actually available from the MOOSE heat transfer module with the synchronization_interval = parent_app parameter.

Next, we will allow NekRS to select its own time step using the NekTimeStepper, combined with a transient executioner.

(tutorials/gas_compact_multiphysics/nek.i)We also add a number of postprocessors to query the Nek solution.

(tutorials/gas_compact_multiphysics/nek.i)Finally, we define the output formats and hide the automatically-created flux_integral and transfer_in postprocessors from the screen (console) to have neater output.

OpenMC-THM-MOOSE

In this section, we describe the coupling of OpenMC, THM, and MOOSE in the "tree" MultiApp hierarchy shown in Figure 2. For the most part, the OpenMC and MOOSE heat conduction input files only have small differences from those presented earlier for the OpenMC-NekRS-MOOSE coupling. Therefore, for the OpenMC and MOOSE heat conduction cases, we only point out the aspects that differ from the earlier presentation. Differences will exist in several areas:

Due to the use of the "tree" MultiApp hierarchy, different initial conditions are required because the applications execute in a different order

Due to the use of the "tree" MultiApp hierarhcy, the wall heat flux and wall temperature exchanged between MOOSE and THM will need to go "up a level" to their common parent application, while the thermal-fluid application can now directly send fluid temperature to OpenMC

THM requires a slightly different data transfer than NekRS due to its 1-D representation of the flow channels, which requires us to compute an average of the wall heat flux along the wall channel

OpenMC Input Files

The neutronics physics is solved over the entire domain with OpenMC. The OpenMC wrapping used for the OpenMC-THM-MOOSE coupling is described in the openm_thm.i input file. Relative to the OpenMC input files used for the OpenMC-NekRS-MOOSE coupling shown previously, we now need to add variables to hold the wall temperature, thm_temp_wall, that THM passes to the MOOSE heat transfer module, and the wall heat flux, flux, that the MOOSE heat transfer module passes to THM. Both of these are simply receiver variables that are written into by the two sub-applications (MOOSE heat conduction and THM).

Next, when using the "tree" MultiApp hierarchy, OpenMC runs after the MOOSE heat transfer module, but before THM. Therefore, initial conditions are required for the fluid temperature (for OpenMC) as well as for the fluid wall temperature (which will be sent to the MOOSE heat transfer module on the first time step). Because OpenMC sends its heat source to the MOOSE heat transfer module at the beginning of a time step, we also need to set an initial condition on the heat source.

(tutorials/gas_compact_multiphysics/openmc_thm.i)The [Problem] block is identical to that shown earlier for the OpenMC-NekRS-MOOSE coupling. Next, we define the two sub-applications and the transfers. The transfers of wall heat flux and wall temperature between MOOSE and THM first pass up through OpenMC to one of the sub-applications, giving four additional transfers in the main application's input file than we saw for the "single-stack" hierarchy.

The advantage of the "tree" MultiApp hierarhcy is in the simpler solid input file that we will see in Solid Input Files , which will not need to have a dummy part of the mesh for the fluid region or any NullKernels.

Finally, because we are coupling to THM, we need to average the wall heat flux around the coolant channel into a number of layers to transfer from the 3-D MOOSE heat conduction model to the 1-D fluid flow model. Heat flux is averaged using a LayeredSideAverage.

(tutorials/gas_compact_multiphysics/openmc_thm.i)All other aspects of the input file are the same as for the OpenMC-NekRS-MOOSE case.

Solid Input Files

The solid heat conduction input file is the solid_thm.i input. This input file is identical to the solid_nek.i input file for the OpenMC-NekRS-MOOSE case except for:

There are no MultiApps, and therefore no transfers, to a thermal-fluid code

There are no initial conditions in the input file, since OpenMC sends the MOOSE heat conduction file all necessary initial conditions for wall temperature and heat source

There is no need to explicitly turn off the material coverage check or add a NullKernel because there is not a dummy fluid block to receive fluid temperature from a sub-application

Because all of these differences are simply omissions, this concludes the discussion of the solid input file. For reference, the full file is below.

(tutorials/gas_compact_multiphysics/solid_thm.i)Fluid Input Files

The fluid mass, momentum, and energy transport physics are solved using THM. The input file for this portion of the physics is the thm.i input. The THM input file is built using syntax specific to THM - we will only briefly cover the syntax, and instead refer users to the THM documentation for more information. First, we define a number of constants at the beginning of the file and apply some global settings. We set the initial conditions for pressure, velocity, and temperature and indicate the fluid EOS object using IdealGasFluidProperties.

Next, we define the "components" in the domain. These components essentially consist of the physics equations and boundary conditions solved by THM, but expressed in THM-specific syntax. These components define single-phase flow in a pipe, an inlet mass flowrate boundary condition, an outlet pressure condition, and heat transfer to the pipe wall.

(tutorials/gas_compact_multiphysics/thm.i)Associated with these components are a number of closures, defined as materials. We set up the Churchill correlation for the friction factor and the Dittus-Boelter correlation for the convective heat transfer coefficient. For the Dittus-Boelter correlation, we use a corrected version of the closure (with the leading coefficient changed from 0.023 to 0.021) based on the NekRS simulations described in an earlier tutorial. Additional materials are created to represent dimensionless numbers and other auxiliary terms, such as the wall temperature. As can be seen here, the Material system is not always used to represent quantities traditioanlly thought of as "material properties."

(tutorials/gas_compact_multiphysics/thm.i)THM computes the wall temperature to apply a boundary condition in the MOOSE heat transfer module. To convert the T_wall material into an auxiliary variable, we use the ADMaterialRealAux.

Finally, we set the preconditioner, a Transient executioner, and set an Exodus output. We will run THM to convergence based on a tight steady state relative tolerance of .

(tutorials/gas_compact_multiphysics/thm.i)Execution and Postprocessing

To run the coupled OpenMC-NekRS-MOOSE calculation, run the following:

This will run with 500 MPI processes (you may run with other parallel configurations as needed, but you will find that the NekRS simulation requires HPC resources due to the large mesh). To run the coupled OpenMC-THM-MOOSE calculation, run the following:

which will run with 2 MPI ranks with 36 threads each (again, these parallel resource choices are only examples). When the two simulations have completed, you will have created a number of different output files. For the OpenMC-NekRS-MOOSE calculation:

openmc_nek_out.e, an Exodus file with the OpenMC solutionopenmc_nek_out_bison0.e, an Exodus file with the MOOSE heat conduction solutionopenmc_nek_out_bison0_nek0.e, an Exodus file with the NekRS solutioncsv_nek/*, CSV files with various postprocessors and userobjects

And for the OpenMC-THM-MOOSE calculation:

openmc_thm_out.e, an Exodus file with the OpenMC solutionopenmc_thm_out_bison0.e, an Exodus file with the MOOSE heat conduction solutionopenmc_thm_out_thm0.e, an Exodus file with the THM solutioncsv_thm/*, CSV files with various postprocessors and userobjects

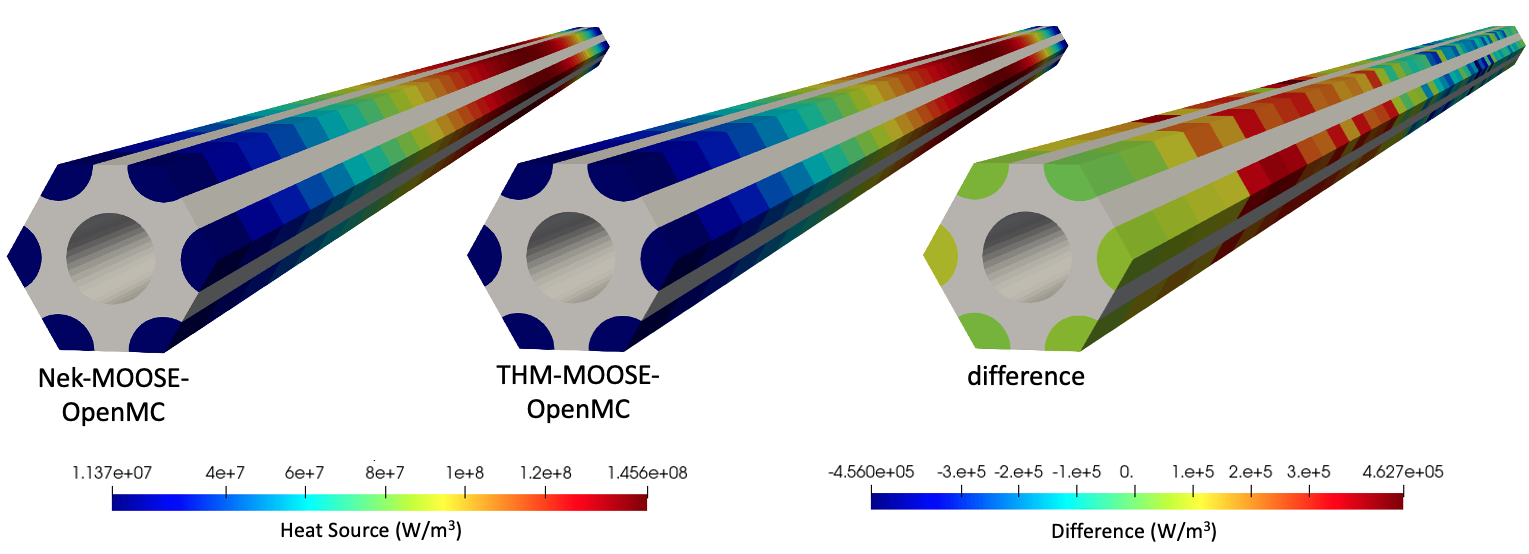

We now briefly present the coupled physics predictions. Figure 6 shows the fission power predicted by OpenMC with thermal-fluid feedback from NekRS-MOOSE and THM-MOOSE. Also shown is the difference between the two predictions (NekRS case minus the THM case). For the six compacts with 50 axial cell layers, the OpenMC model contains a total of 300 tallies; the maximum tally relative error of 1% does introduce some slight asymmetries among the six compacts, which are easiest to discern in the "difference" image in Figure 6.

Figure 6: Heat source predicted by OpenMC with thermal feedback from either NekRS-MOOSE or THM-MOOSE

The lack of reflectors results in a relatively high leakage percentage of about 18.8%. For the unit cell, recall that the fluid temperature rise is only 82 K. These two effects combine to give a power distribution that is very nearly symmetric in the axial direction - while the lower solid temperatures near the inlet do cause power to shift slightly downwards, the magnitude of the shift is moderated by the effect of pushing the fission source closer to external boundaries, where those neutrons would be more likely to exit the domain.

In the earlier conjugate heat transfer tutorial, we showed that CHT calculations with NekRS-MOOSE and THM-MOOSE agree very well with one another in terms of fluid temperature, solid temperature, and fluid density. Especially when considering that the neutronics feedback due to fluid temperature and density are very small for gas-cooled systems, the excellent agreement in OpenMC's fission distribution shown in Figure 6 is as expected. When cast in terms of a percent difference (as opposed to the absolute difference shown in Figure 6), the NekRS-MOOSE-OpenMC and THM-MOOSE-OpenMC cases agree to within 1%, which is within the range of the uncertainty in the fission tally itself.

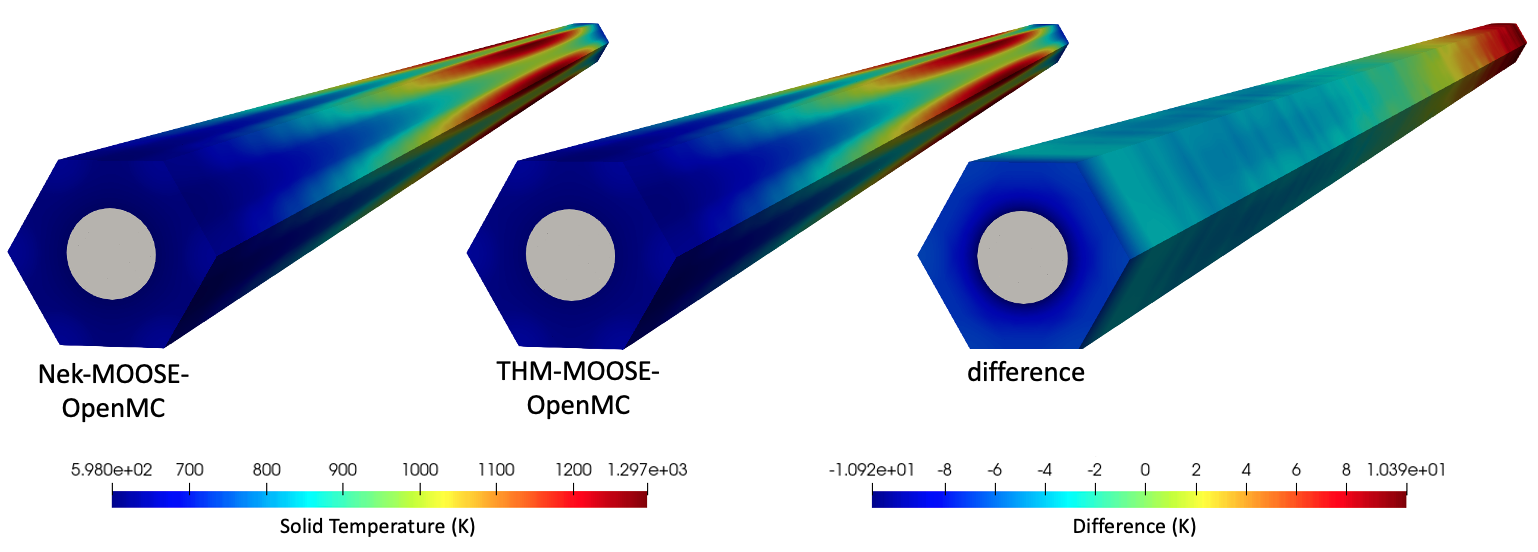

Figure 7 shows the solid temperature predicted by the MOOSE heat transfer module, with physics feedback provided by either NekRS-OpenMC or THM-OpenMC. The solid temperature peaks slightly downstream of the maximum OpenMC power due to the combined effects of the power distribution and convective heat transfer at the wall. Figure 7 helps explain the trend in the power difference shown in Figure 6. Near the inlet, NekRS predicts a lower temperature than THM, while near the outlet NekRS predicts a higher temperature than THM. Due to the negative temperature reactivity coefficient of the unit cell, this causes the NekRS-based coupled model to predict a higher power than THM near the inlet, but a lower power than THM near the outlet.

Figure 7: Solid temperature predicted by the MOOSE heat transfer module with physics feedback from either OpenMC-NekRS or OpenMC-THM.

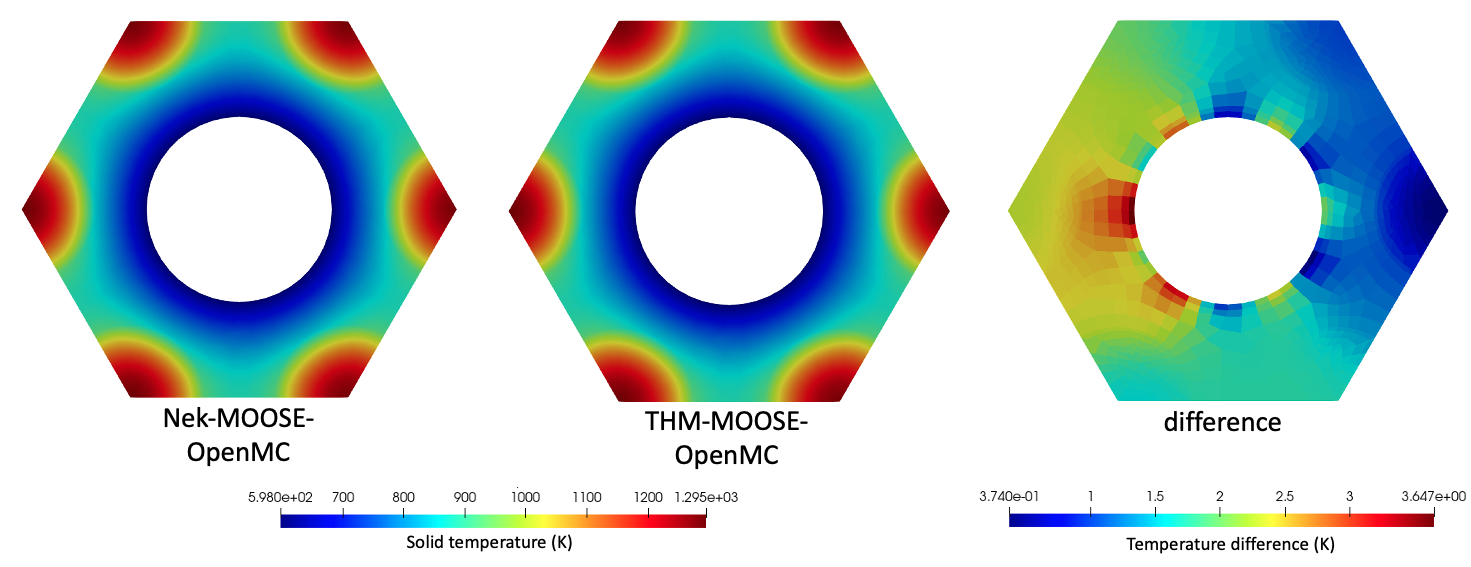

Figure 8 shows the solid temperature predictions on the axial midplane with physics feedback provided by either NekRS-OpenMC or THM-OpenMC. Also shown is the difference between the two (NekRS-based case minus the THM-based case). The THM simulations are unable to capture the radial variation in heat flux along the channel wall, causing THM to underpredict temperatures near the channel wall close to the fuel compacts. However, an additional source of difference is now also present - small radial asymmetries in OpenMC's fission distribution contribute a small asymmetry in solid temperature on the order of 2 K. The tolerance on the fission power uncertainty can simply be increased in order to push down this contribution.

Figure 8: Solid temperature predicted by the MOOSE heat transfer module with physics feedback from either OpenMC-NekRS or OpenMC-THM on the axial mid-plane.

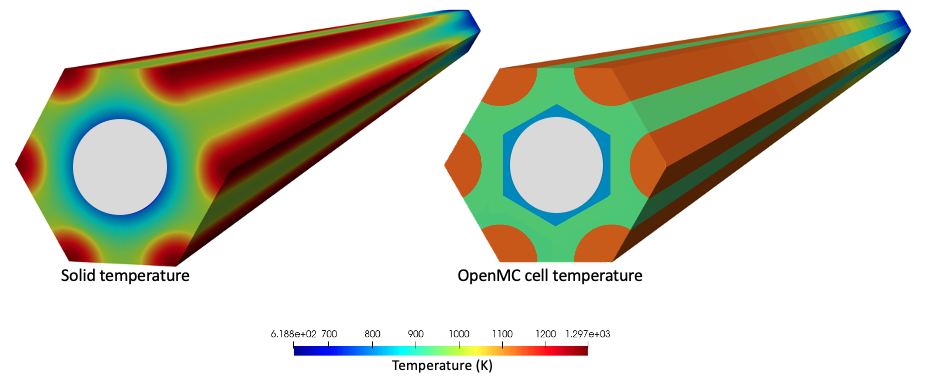

On each axial layer, the OpenMC model receives a total of 14 temperatures (six compacts plus six graphite regions around them, one graphite cell surrounding the coolant channel, and the coolant channel). Figure 9 shows the solid temperature predicted by the NekRS-MOOSE-OpenMC high-resolution simulations along with the actual cell temperature imposed in OpenMC for a half-height slice of the unit cell. OpenMC sets a volume-average temperature for each cell according to the mesh mirror element centroid mappings to OpenMC's cells.

Figure 9: Solid temperature predicted by the MOOSE heat transfer module with physics feedback from OpenMC-NekRS and the solid temperature actually imposed in OpenMC for a half-height portion of the unit cell

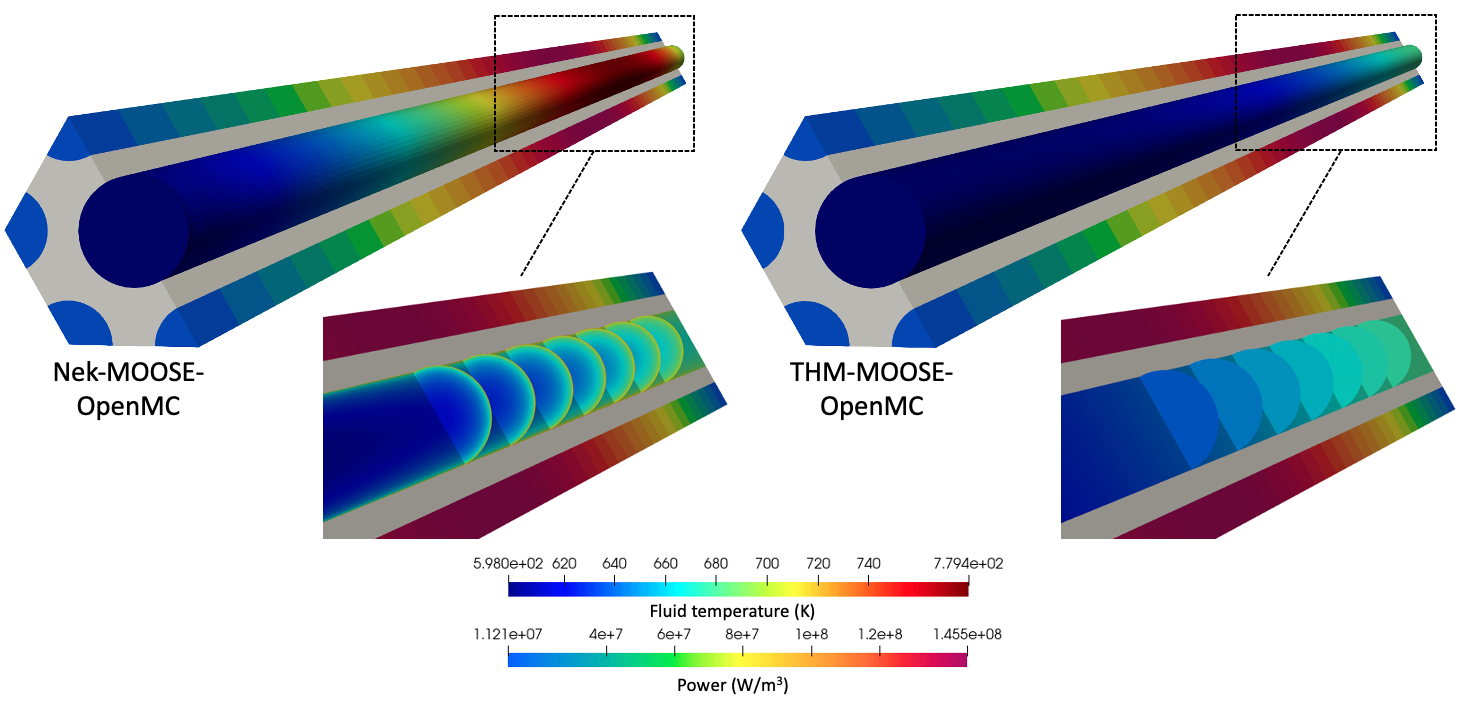

Figure 10 shows the fluid temperature predicted by NekRS and THM, with physics feedback provided by MOOSE-OpenMC. NekRS resolves the thermal boundary layer, whereas the THM model uses the Dittus-Boelter correlation to represent the temperature drop from the heated wall to the bulk. Therefore, the fluid temperature shown in Figure 10 for THM is the area-averaged fluid temperature. The wall temperature predicted by NekRS follows a very similar distribution as the heat flux, with a profile that peaks slightly downstream of the maximum power due to the combined effects of convective heat transfer and the fission power distribution.

Figure 10: Fluid temperature predicted for the multiphysics simulations for NekRS-MOOSE-OpenMC and THM-MOOSE-OpenMC.

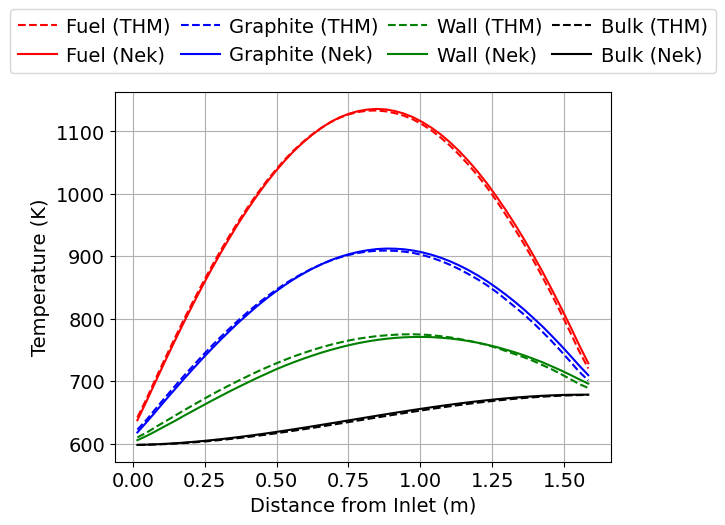

Figure 11 shows the radially-averaged temperatures for the two thermal-fluid feedback options. The fluid bulk temperature increases along the flow direction, with a faster rate of increase where the power density is highest.

Figure 11: Radially-averaged temperatures for the NekRS-MOOSE-OpenMC and THM-MOOSE-OpenMC simulations.

References

- INL.

High-Temperature Gas-Cooled Test Reactor Point Design.

Technical Report INL/EXT-16-38296, Idaho National Laboratory, 2016.

URL: https://tinyurl.com/v9a4hym5.[BibTeX]

- A.J. Novak, D. Andrs, P. Shriwise, J. Fang, H. Yuan, D. Shaver, E. Merzari, P.K. Romano, and R.C. Martineau.

Coupled Monte Carlo and Thermal-Fluid Modeling of High Temperature Gas Reactors Using Cardinal.

Annals of Nuclear Energy, 177:109310, 2022.

doi:10.1016/j.anucene.2022.109310.[BibTeX]